Gen-AI: A Concise Tutorial

CycleGAN and StyleGAN

Read this chapter to understand CycleGAN and StyleGAN and how they stand out for their capabilities in generating and transforming images.

What is a Cycle-Consistent Generative Adversarial Network?

CycleGAN, short for Cycle-Consistent Generative Adversarial Network, is a GAN framework designed to transfer the characteristics of one image domain to another. In other words, CycleGAN targets unpaired image-to-image translation tasks, where the training images in the two domains have no one-to-one correspondence.

In contrast to paired image-to-image translation methods such as pix2pix, which require matched input-output training pairs, CycleGAN can learn mappings between two different domains without any paired supervision.

How does a CycleGAN Work?

CycleGAN works by treating translation as an image reconstruction problem. Let's understand how it works:

- CycleGAN first takes an input image, say "X", and uses a generator, say "G", to translate it into an image in the target domain.

- It then reverses the process with a second generator, say "F", which maps the translated image back to a reconstruction of the original image. Training encourages this reconstruction to match "X".
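The two steps above can be sketched with toy stand-ins for the generators. This is only an illustration under stated assumptions: `G` and `F` here are a single orthogonal linear map and its inverse, not the convolutional networks a real CycleGAN would train, and the 4-element vector stands in for an image.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for the two generators. An orthogonal matrix Q is
# used so that the inverse mapping is exactly Q.T and the cycle is easy to check.
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)))

def G(x):
    """Translate a flattened toy 'image' from domain X to domain Y."""
    return x @ Q

def F(y):
    """Translate a flattened toy 'image' from domain Y back to domain X."""
    return y @ Q.T

x = rng.uniform(size=(1, 4))    # input image X (toy example)
translated = G(x)               # step 1: X -> G(X)
reconstructed = F(translated)   # step 2: G(X) -> F(G(X))

# Mean absolute difference between the input and its reconstruction.
cycle_error = np.abs(reconstructed - x).mean()
print(f"cycle reconstruction error: {cycle_error:.2e}")
```

Because `F` is the exact inverse of `G` in this toy setup, the reconstruction error is at floating-point noise level. In a trained CycleGAN the two generators are learned separately, and the error is only driven toward zero by the training objective.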

Architecture of CycleGAN

Like a traditional GAN, CycleGAN has two kinds of networks: generators and discriminators. Along with these components, CycleGAN introduces the concept of cycle consistency. Let's understand these components of CycleGAN in detail:

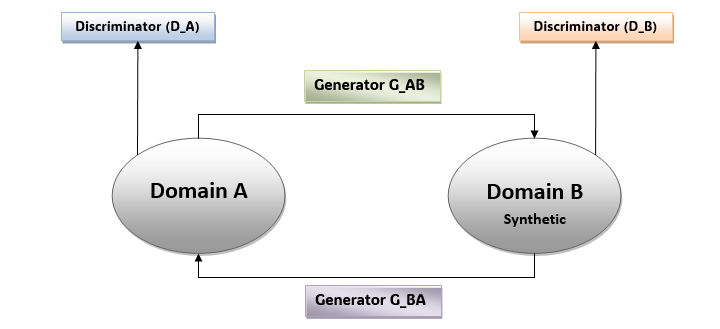

Generator Networks (G_AB and G_BA)

CycleGAN has two generator networks, G_AB and G_BA. They translate images from domain A to domain B and from domain B to domain A, respectively. They are trained to minimize the reconstruction error between an original image and its reconstruction after a round trip through both generators.

Discriminator Networks (D_A and D_B)

CycleGAN also has two discriminator networks, D_A and D_B. They distinguish between real and translated images in domains A and B, respectively. Through the adversarial loss, they push the generators to produce more realistic images.
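As a rough illustration of how an adversarial loss pulls the discriminator and generator in opposite directions, the sketch below uses the least-squares form of the loss. That form is one common choice and an assumption here, since the text does not say which adversarial loss is meant; the scores are made-up numbers rather than outputs of a real network.

```python
import numpy as np

def discriminator_loss(d_real, d_fake):
    """Least-squares loss for a discriminator.

    Pushes scores on real images toward 1 and scores on translated images toward 0.
    """
    return 0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2))

def generator_adversarial_loss(d_fake):
    """Least-squares loss for a generator.

    Pushes the discriminator's scores on translated images toward 1.
    """
    return np.mean((d_fake - 1.0) ** 2)

# Hypothetical scores from D_B for a batch of three images.
d_b_real = np.array([0.9, 0.8, 1.0])   # D_B on real domain-B images
d_b_fake = np.array([0.2, 0.1, 0.3])   # D_B on translated images G_AB(a)

loss_d_b = discriminator_loss(d_b_real, d_b_fake)
loss_g_ab = generator_adversarial_loss(d_b_fake)
print(loss_d_b, loss_g_ab)
```

With these scores the discriminator is doing well (low loss) while the generator's loss is high, which is the signal that drives G_AB toward more realistic translations. The same pair of losses applies to D_A and G_BA in the other direction.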

Cycle Consistency Loss

CycleGAN introduces a third component called the cycle consistency loss. It enforces consistency between a real image and its reconstruction after translation to the other domain and back, in both directions (A to B to A, and B to A to B). With this loss, the generators learn meaningful mappings between the two domains that preserve the content of the input, while the adversarial loss takes care of the realism of the generated images.
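Using the names G_AB, G_BA, D_A and D_B from above, the cycle consistency loss is commonly written with an L1 distance, as sketched below. The data distributions p_A and p_B, the generic adversarial term L_GAN, and the weight λ that balances the terms are notation introduced here for illustration, not symbols defined in this chapter.

```latex
\mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA}) =
  \mathbb{E}_{a \sim p_A}\big[\lVert G_{BA}(G_{AB}(a)) - a \rVert_1\big]
  + \mathbb{E}_{b \sim p_B}\big[\lVert G_{AB}(G_{BA}(b)) - b \rVert_1\big]

\mathcal{L}_{\text{total}} =
  \mathcal{L}_{\text{GAN}}(G_{AB}, D_B)
  + \mathcal{L}_{\text{GAN}}(G_{BA}, D_A)
  + \lambda \, \mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA})
```

The first term of the cycle loss covers the A to B to A round trip and the second covers B to A to B. A larger λ puts more weight on preserving the input's content relative to fooling the discriminators.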

The figure at the start of this section shows the schematic diagram of a CycleGAN.

Applications of CycleGAN

CycleGAN is used in various image-to-image translation tasks, including the following:

- Style Transfer: CycleGAN can be used for transferring the style of images between different domains, such as converting photos to paintings, day scenes to night scenes, and aerial photos to maps.

- Domain Adaptation: CycleGAN can be used for adapting models trained on synthetic data to real-world data. This can improve generalization and performance in tasks like object detection and semantic segmentation.

- Image Enhancement: CycleGAN can be used for enhancing the quality of images by removing artifacts, adjusting colors, and improving visual aesthetics.

What is a Style Generative Adversarial Network?

StyleGAN, short for Style Generative Adversarial Network, is a GAN framework developed by NVIDIA. StyleGAN is specifically designed for generating photo-realistic, high-quality images.

In contrast to traditional GANs, StyleGAN introduces several techniques that improve image synthesis and give better control over specific attributes of the generated image.

Architecture of StyleGAN

StyleGAN builds on the progressive GAN architecture and modifies the generator. The discriminator is almost the same as in the progressive GAN. Let's understand how the StyleGAN architecture is different:

Progressive Growing

Unlike a traditional GAN, StyleGAN uses a progressive growing strategy, in which the generator and discriminator networks gradually increase in size and complexity during training. Progressive growing allows StyleGAN to generate high-resolution images (up to 1024x1024 pixels).
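A minimal sketch of the idea follows. It assumes training starts at 4x4 and doubles the resolution at each stage, and that a newly added layer is blended in gradually with a weight `alpha`; the text only states the final resolution, so the starting size, the function names and the random arrays standing in for network outputs are all illustrative assumptions.

```python
import numpy as np

def resolution_schedule(start=4, final=1024):
    """Resolutions visited during training, doubling at each stage."""
    resolutions = [start]
    while resolutions[-1] < final:
        resolutions.append(resolutions[-1] * 2)
    return resolutions

def upsample_nearest(image):
    """Double the height and width of an (H, W, C) image by repeating pixels."""
    return image.repeat(2, axis=0).repeat(2, axis=1)

def fade_in(low_res_output, new_layer_output, alpha):
    """Blend the upsampled previous-stage output with the new layer's output.

    alpha grows from 0 to 1 while the new, higher-resolution layer is phased in.
    """
    return (1.0 - alpha) * upsample_nearest(low_res_output) + alpha * new_layer_output

schedule = resolution_schedule()
print(schedule)

rng = np.random.default_rng(0)
old = rng.uniform(size=(4, 4, 3))   # hypothetical output of the 4x4 stage
new = rng.uniform(size=(8, 8, 3))   # hypothetical output of the new 8x8 layer
blended = fade_in(old, new, alpha=0.3)
print(blended.shape)
```

Blending with `alpha` avoids a sudden shock to the already trained lower-resolution layers when a new layer is added: at `alpha = 0` the output is just the upsampled old image, and at `alpha = 1` the new layer has fully taken over.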

Mapping Network

To control the style and appearance of the generated images, StyleGAN uses a mapping network. The mapping network converts input latent vectors into intermediate latent vectors, which are then used to control the generator.
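The mapping network can be pictured as a small stack of fully connected layers. The sketch below assumes 8 layers, 512-dimensional latents and leaky ReLU activations, and uses random, untrained weights; these sizes and all function names are assumptions for illustration rather than details given in this chapter.

```python
import numpy as np

LATENT_DIM = 512   # assumed size of both the input and intermediate latents
NUM_LAYERS = 8     # assumed depth of the mapping network

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def init_mapping_network(rng, dim=LATENT_DIM, num_layers=NUM_LAYERS):
    """Random (untrained) weights and zero biases for a fully connected stack."""
    return [
        (rng.normal(scale=np.sqrt(2.0 / dim), size=(dim, dim)), np.zeros(dim))
        for _ in range(num_layers)
    ]

def mapping_network(z, params):
    """Map input latent vectors z to intermediate latent vectors w."""
    # Normalize each z to unit average magnitude before the first layer.
    x = z / np.sqrt(np.mean(z ** 2, axis=1, keepdims=True) + 1e-8)
    for weight, bias in params:
        x = leaky_relu(x @ weight + bias)
    return x

rng = np.random.default_rng(0)
params = init_mapping_network(rng)
z = rng.normal(size=(2, LATENT_DIM))   # two input latent vectors
w = mapping_network(z, params)
print(w.shape)
```

The point of the extra network is that the intermediate vectors `w` do not have to follow the fixed distribution of `z`, which gives training room to arrange them so that individual attributes are easier to control.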

Synthesis Network

StyleGAN also incorporates a synthesis network that takes the intermediate latent vectors produced by the mapping network and generates the final image. The synthesis network consists of a series of convolutional layers with adaptive instance normalization (AdaIN), which injects the style at each layer and enables the model to generate high-quality images with fine details.
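Adaptive instance normalization itself is a short operation: each feature map is normalized on its own, then rescaled and shifted with values that come from the style. The sketch below shows that operation on toy feature maps; the scale and bias values are hypothetical fixed numbers, whereas in StyleGAN they would be produced from the intermediate latent vector by a learned transform.

```python
import numpy as np

def adaptive_instance_norm(features, style_scale, style_bias, eps=1e-8):
    """AdaIN over feature maps of shape (channels, height, width).

    Each channel is normalized to zero mean and unit variance, then
    rescaled and shifted with per-channel values derived from the style.
    """
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)
    return style_scale[:, None, None] * normalized + style_bias[:, None, None]

rng = np.random.default_rng(0)
features = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 8))  # toy feature maps

# Hypothetical per-channel style parameters (one scale and one bias per channel).
style_scale = np.array([1.0, 0.5, 2.0, 1.5])
style_bias = np.array([0.0, 1.0, -1.0, 0.5])

out = adaptive_instance_norm(features, style_scale, style_bias)
print(out.mean(axis=(1, 2)))  # per-channel means, close to style_bias
print(out.std(axis=(1, 2)))   # per-channel standard deviations, close to style_scale
```

After the operation, the statistics of each channel are set by the style rather than by the incoming features, which is how the style overrides the appearance at that layer.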

Style Mixing Regularization

StyleGAN also applies style mixing regularization during training: some images are generated from two latent vectors instead of one, with the styles switching from one vector to the other at a chosen layer. This allows the model to combine different styles from multiple latent vectors and enhances the realism of the generated output images.
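The mixing step can be sketched as building one style per synthesis layer from two intermediate latent vectors. The layer count of 18 (two style inputs per resolution from 4x4 to 1024x1024), the 512-dimensional latents and the function name `mix_styles` are assumptions made for this illustration.

```python
import numpy as np

NUM_STYLE_LAYERS = 18  # assumed: two style inputs per resolution, 4x4 to 1024x1024

def mix_styles(w1, w2, crossover, num_layers=NUM_STYLE_LAYERS):
    """Build per-layer styles: w1 before the crossover layer, w2 from it onward."""
    styles = np.tile(w1, (num_layers, 1))
    styles[crossover:] = w2
    return styles

rng = np.random.default_rng(0)
w1 = rng.normal(size=512)  # intermediate latent used for the earlier (coarse) layers
w2 = rng.normal(size=512)  # intermediate latent used for the later (fine) layers

# Pick a random layer at which to switch from w1 to w2.
crossover = int(rng.integers(1, NUM_STYLE_LAYERS))
styles = mix_styles(w1, w2, crossover)
print(styles.shape, crossover)
```

Layers before the crossover point control coarse properties and layers after it control finer ones, so the same mechanism can be used at generation time to combine, for example, the overall structure of one image with the fine detail of another.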

Applications of StyleGAN

StyleGAN is used in various domains, including the following:

Artistic Rendering

Because it offers better control over specific attributes such as age, gender, and facial expression, StyleGAN can be used to create realistic portraits, artwork, and other kinds of images.

Conclusion

In this chapter, we explained two different variants of the traditional Generative Adversarial Network, namely CycleGAN and StyleGAN.

CycleGAN is designed for unpaired image-to-image translation tasks, where the training images in the two domains have no one-to-one correspondence, whereas StyleGAN is specifically designed for generating photo-realistic, high-quality images.

Understanding the architectures and innovations behind both CycleGAN and StyleGAN gives us insight into their potential to create realistic output images.