Data Science 简明教程

Data Science - Getting Started

数据科学是从数据中提取和分析有用信息以解决难以通过分析解决的问题的过程。例如,当你访问一个电子商务网站,在购买之前查看一些类别和产品时,你正在创建分析师可以用来弄清楚你是如何进行购买的数据。

Data Science is the process of extracting and analysing useful information from data to solve problems that are difficult to solve analytically. For example, when you visit an e-commerce site and look at a few categories and products before making a purchase, you are creating data that Analysts can use to figure out how you make purchases.

它涉及不同的学科,例如数学和统计建模,从其来源中提取数据和应用数据可视化技术。它还涉及处理大数据技术以收集结构化和非结构化数据。

It involves different disciplines like mathematical and statistical modelling, extracting data from its source and applying data visualization techniques. It also involves handling big data technologies to gather both structured and unstructured data.

它可以帮助你找到隐藏在原始数据中的模式。术语“数据科学”已经演变,因为数学统计、数据分析和“大数据”已经随着时间而改变。

It helps you find patterns that are hidden in the raw data. The term "Data Science" has evolved because mathematical statistics, data analysis, and "big data" have changed over time.

数据科学是一个跨学科领域,它让你可以从有组织和无组织的数据中学习。利用数据科学,你可以将业务问题转化为研究项目,然后将其应用到实际解决方案中。

Data Science is an interdisciplinary field that lets you learn from both organised and unorganised data. With data science, you can turn a business problem into a research project and then apply into a real-world solution.

History of Data Science

约翰·图基在 1962 年使用术语“数据分析”来定义一个类似于当前现代数据科学的领域。在 1985 年对北京中国科学院的演讲中,C·F·杰夫·吴首次将短语“数据科学”作为统计的替代词。随后,在 1992 年于蒙彼利埃第二大学举办的会议上,从事统计工作的参与者认识到一个以多种来源和形式的数据为中心的新领域诞生,将统计和数据分析的已知思想和原则与计算机相结合。

John Tukey used the term "data analysis" in 1962 to define a field that resembled current modern data science. In a 1985 lecture to the Chinese Academy of Sciences in Beijing, C. F. Jeff Wu introduced the phrase "Data Science" as an alternative word for statistics for the first time. Subsequently, conference held at the University of Montpellier II in 1992 participants at a statistics recognised the birth of a new field centred on data of many sources and forms, integrating known ideas and principles of statistics and data analysis with computers.

彼得·诺尔在 1974 年建议将“数据科学”作为计算机科学的替代名称。国际分类学会联合会是第一个将数据科学作为专门主题予以突出的会议,是在 1996 年。然而,这个概念仍然在变化中。继在北京中国科学院的 1985 年的演讲后,C·F·杰夫·吴再次倡导在 1997 年将统计学更名为数据科学。他的理由是,一个新名称将有助于统计学摆脱不准确的刻板印象和观念,例如与会计有关或仅限于数据描述。林知己在 1998 年提出数据科学是一个包含数据设计、数据收集和数据分析三个组成部分的新型多学科概念。

Peter Naur suggested the phrase "Data Science" as an alternative name for computer science in 1974. The International Federation of Classification Societies was the first conference to highlight Data Science as a special subject in 1996. Yet, the concept remained in change. Following the 1985 lecture at the Chinese Academy of Sciences in Beijing, C. F. Jeff Wu again advocated for the renaming of statistics to Data Science in 1997. He reasoned that a new name would assist statistics in inaccurate stereotypes and perceptions, such as being associated with accounting or confined to data description. Hayashi Chikio proposed Data Science in 1998 as a new, multidisciplinary concept with three components: data design, data collecting, and data analysis.

在 20 世纪 90 年代,“知识发现”和“数据挖掘”是识别因数据集不断增长而产生的模式的过程的流行短语。

In the 1990s, "knowledge discovery" and "data mining" were popular phrases for the process of identifying patterns in datasets that were growing in size.

在 2012 年,工程师托马斯·H·戴文波特和 DJ·帕蒂尔宣称“数据科学家:21 世纪最热门的工作。”这个术语被纽约时报和波士顿环球报等主要都市出版物所采用。十年后,他们又重复了这一点,并补充说“这个职位的需求比以往任何时候都大。”

In 2012, engineers Thomas H. Davenport and DJ Patil proclaimed "Data Scientist: The Hottest Job of the 21st Century," a term that was taken up by major metropolitan publications such as the New York Times and the Boston Globe. They repeated it a decade later, adding that "the position is in more demand than ever"

威廉·S·克利夫兰经常与数据科学作为独立领域的当前理解联系在一起。在 2001 年的一项研究中,他主张将统计学发展为技术领域;由于这将从根本上改变科目,因此需要一个新名称。在随后的几年中,“数据科学”变得越来越流行。在 2002 年,科学技术数据委员会出版了《数据科学杂志》。哥伦比亚大学于 2003 年创办了《数据科学杂志》。美国统计协会的统计学习和数据挖掘部分在 2014 年更名为统计学习和数据科学部分,反映了数据科学越来越受欢迎。

William S. Cleveland is frequently associated with the present understanding of Data Science as a separate field. In a 2001 study, he argued for the development of statistics into technological fields; a new name was required as this would fundamentally alter the subject. In the following years, "Data Science" grew increasingly prevalent. In 2002, the Council on Data for Science and Technology published Data Science Journal. Columbia University established The Journal of Data Science in 2003. The Section on Statistical Learning and Data Mining of the American Statistical Association changed its name to the Section on Statistical Learning and Data Science in 2014, reflecting the growing popularity of Data Science.

在 2008 年,DJ·帕蒂尔和杰夫·哈默巴赫获得了“数据科学家”的专业资格。尽管国家科学委员会在他们 2005 年的研究“长期数字数据收集:支持 21 世纪的研究和教学”中使用了这个术语,但它指的是在管理数字数据收集方面任何重要的角色。

In 2008, DJ Patil and Jeff Hammerbacher were given the professional designation of "data scientist." Although it was used by the National Science Board in their 2005 study "Long-Lived Digital Data Collections: Supporting Research and Teaching in the 21st Century," it referred to any significant role in administering a digital data collection.

对于“数据科学”的含义尚未达成共识,而且一些人认为它是一个流行语。大数据在营销中是一个类似的概念。数据科学家负责将海量数据转化为有用信息,并开发软件和算法,以帮助企业和机构确定最佳运营。

An agreement has not yet been reached on the meaning of Data Science, and some believe it to be a buzzword. Big data is a similar concept in marketing. Data scientists are responsible for transforming massive amounts of data into useful information and developing software and algorithms that assist businesses and organisations in determining optimum operations.

Why Data Science?

据 IDC 称,到 2025 年,全球数据将达到 175 泽字节。数据科学帮助企业了解来自不同来源的海量数据,提取有用的见解,并做出更好的数据驱动决策。数据科学广泛应用于多个工业领域,例如营销、医疗保健、金融、银行和政策制定。

According to IDC, worldwide data will reach 175 zettabytes by 2025. Data Science helps businesses to comprehend vast amounts of data from different sources, extract useful insights, and make better data-driven choices. Data Science is used extensively in several industrial fields, such as marketing, healthcare, finance, banking, and policy work.

以下是使用数据分析技术的重要优势:-

Here are significant advantages of using Data Analytics Technology −

-

Data is the oil of the modern age. With the proper tools, technologies, and algorithms, we can leverage data to create a unique competitive edge.

-

Data Science may assist in detecting fraud using sophisticated machine learning techniques.

-

It helps you avoid severe financial losses.

-

Enables the development of intelligent machines

-

You may use sentiment analysis to determine the brand loyalty of your customers. This helps you to make better and quicker choices.

-

It enables you to propose the appropriate product to the appropriate consumer in order to grow your company.

Need for Data Science

The data we have and how much data we generate

根据福布斯的报道,2010 至 2020 年间,全球产生的、复制的、记录的和消耗的总数据量激增约 5,000%,从 1.2 万亿千兆字节增长到 59 万亿千兆字节。

According to Forbes, the total quantity of data generated, copied, recorded, and consumed in the globe surged by about 5,000% between 2010 and 2020, from 1.2 trillion gigabytes to 59 trillion gigabytes.

How companies have benefited from Data Science?

-

Several businesses are undergoing data transformation (converting their IT architecture to one that supports Data Science), there are data boot camps around, etc. Indeed, there is a straightforward explanation for this: Data Science provides valuable insights.

-

Companies are being outcompeted by firms that make judgments based on data. For example, the Ford organization in 2006, had a loss of $12.6 billion. Following the defeat, they hired a senior data scientist to manage the data and undertook a three-year makeover. This ultimately resulted in the sale of almost 2,300,000 automobiles and earned a profit for 2009 as a whole.

Demand and Average Salary of a Data Scientist

-

According to India Today, India is the second biggest centre for Data Science in the world due to the fast digitalization of companies and services. By 2026, analysts anticipate that the nation will have more than 11 million employment opportunities. In fact, recruiting in the Data Science field has surged by 46% since 2019.

-

Bank of America was one of the first financial institutions to provide mobile banking to its consumers a decade ago. Recently, the Bank of America introduced Erica, its first virtual financial assistant. It is regarded the as best financial invention in the world. Erica now serves as a client adviser for more than 45 million consumers worldwide. Erica uses Voice Recognition to receive client feedback, which represents a technical development in Data Science.

-

The Data Science and Machine Learning curves are steep. Although India sees a massive influx of data scientists each year, relatively few possess the needed skill set and specialization. As a consequence, people with specialised data skills are in great demand.

Impact of Data Science

数据科学对现代文明的各个方面产生了重大影响。数据科学对组织的重要性不断提高。根据一项调查,到 2023 年,数据科学的全球市场将达到 1150 亿美元。

Data Science has had a significant influence on several aspects of modern civilization. The significance of Data Science to organisations keeps on increasing. According to one research, the worldwide market for Data Science would reach $115 billion by 2023.

医疗保健行业受益于数据科学的兴起。2008 年,谷歌员工意识到他们可以实时监控流感毒株。以前的只能每周提供一次实例更新。谷歌能够利用数据科学构建出首批用于监测疾病传播的系统。

Healthcare industry has benefited from the rise of Data Science. In 2008, Google employees realised that they could monitor influenza strains in real time. Previous technologies could only provide weekly updates on instances. Google was able to build one of the first systems for monitoring the spread of diseases by using Data Science.

体育产业也同样受益于数据科学。2019 年,一位数据科学家找到了方法来衡量和计算进球尝试如何增加足球队的取胜几率。事实上,数据科学被用于轻松计算多种体育项目的统计数据。

The sports sector has similarly profited from data science. A data scientist in 2019 found ways to measure and calculate how goal attempts increase a soccer team’s odds of winning. In reality, data science is utilised to easily compute statistics in several sports.

政府机构也每天都在使用数据科学。全球各国的政府使用数据库监控有关社保、税收以及有关其居民的其他数据的信息。政府对新兴技术的使用不断发展。

Government agencies also use data science on a daily basis. Governments throughout the globe employ databases to monitor information regarding social security, taxes, and other data pertaining to their residents. The government’s usage of emerging technologies continues to develop.

由于互联网已成为人类交流的主要媒介,因此电子商务的普及度也在增加。利用数据科学,在线企业可以监控整个客户体验,包括营销活动、购买和消费者趋势。广告必须是电商企业使用数据科学的最大实例之一。您是否曾在网上搜索过某些内容或访问过电商产品网站,却发现社交网站和博客上充斥着该产品的广告?

Since the Internet has become the primary medium of human communication, the popularity of e-commerce has also grown. With data science, online firms may monitor the whole of the customer experience, including marketing efforts, purchases, and consumer trends. Ads must be one of the greatest instances of eCommerce firms using data science. Have you ever looked for anything online or visited an eCommerce product website, only to be bombarded by advertisements for that product on social networking sites and blogs?

广告像素对于在线收集和分析用户信息至关重要。企业利用在线消费者行为通过因特网重新投放目标消费者。这种对客户信息的利用超出了电子商务的范畴。诸如 Tinder 和 Facebook 等应用使用算法帮助用户找到他们想要找的内容。互联网是一个不断增长的数据宝库,收集和分析数据也将继续增长。

Ad pixels are integral to the online gathering and analysis of user information. Companies leverage online consumer behaviour to retarget prospective consumers throughout the internet. This usage of client information extends beyond eCommerce. Apps such as Tinder and Facebook use algorithms to assist users locate precisely what they are seeking. The Internet is a growing treasure trove of data, and the gathering and analysis of this data will also continue to expand.

Data Science - What is Data?

What is Data in Data Science?

数据是数据科学的基础。数据是对指定字符的系统记录、数量或符号,计算机对此执行操作,这些数据可以存储和传输。它是用于特定目的的数据汇编,例如调查或分析。当数据被结构化时,可以将该数据称为信息。数据源(原始数据、次要数据)也是需要考虑的重要因素。

Data is the foundation of data science. Data is the systematic record of a specified characters, quantity or symbols on which operations are performed by a computer, which may be stored and transmitted. It is a compilation of data to be utilised for a certain purpose, such as a survey or an analysis. When structured, data may be referred to as information. The data source (original data, secondary data) is also an essential consideration.

数据有许多形状和形式,但通常可以认为是某种随机实验的结果——一个无法预先确定结果,但其工作原理仍然受分析约束的实验。来自随机实验的数据通常存储在表格或电子表格中。用来表示变量的统计惯例通常称为特征或列,而将单个项目(或单位)称为行。

Data comes in many shapes and forms, but can generally be thought of as being the result of some random experiment - an experiment whose outcome cannot be determined in advance, but whose workings are still subject to analysis. Data from a random experiment are often stored in a table or spreadsheet. A statistical convention to denote variables is often called as features or columns and individual items (or units) as rows.

Types of Data

主要有两类数据,分别是:

There are mainly two types of data, they are −

Qualitative Data

定性数据由无法计算、量化或仅用数字表示的信息组成。它从文本、音频和图片中收集,并使用数据可视化工具进行分布,包括词云、概念图、图形数据库、时间线和信息图表。

Qualitative data consists of information that cannot be counted, quantified, or expressed simply using numbers. It is gathered from text, audio, and pictures and distributed using data visualization tools, including word clouds, concept maps, graph databases, timelines, and infographics.

定性数据分析的目标是回答有关个人活动和动机的问题。收集和分析此类数据可能需要花费很多时间。处理定性数据的研究员或分析师被称为定性研究员或分析师。

The objective of qualitative data analysis is to answer questions about the activities and motivations of individuals. Collecting, and analyzing this kind of data may be time-consuming. A researcher or analyst that works with qualitative data is referred to as a qualitative researcher or analyst.

定性数据可以为任何部门、用户群体或产品提供重要统计信息。

Qualitative data can give essential statistics for any sector, user group, or product.

Types of Qualitative Data

主要有两类定性数据,分别是:

There are mainly two types of Qualitative data, they are −

@[s0}

Nominal Data

在统计中,名义数据(也称为名义标度)用于指定变量,而不提供数值。它是基本测量标度的最基本类型。与序数数据相反,名义数据无法排序或量化。

In statistics, nominal data (also known as nominal scale) is used to designate variables without giving a numerical value. It is the most basic type of measuring scale. In contrast to ordinal data, nominal data cannot be ordered or quantified.

例如,一个人的姓名、头发颜色、国籍等。让我们假设一位名叫 Aby 的女孩头发是棕色的,她来自美国。

For example, The name of the person, the colour of the hair, nationality, etc. Let’s assume a girl named Aby her hair is brown and she is from America.

名义数据既可以是定性的,也可以是定量的。然而,定量标签(例如识别号)没有任何数值或链接与之关联。相反,可以以名义形式表达多个定性数据类别。这些可能包括单词、字母和符号。个人姓名、性别和国籍是最流行的名义数据实例。

Nominal data may be both qualitative and quantitative. Yet, there is no numerical value or link associated with the quantitative labels (e.g., identification number). In contrast, several qualitative data categories can be expressed in nominal form. These might consist of words, letters, and symbols. Names of individuals, gender, and nationality are some of the most prevalent instances of nominal data.

@[s1}

Analyze Nominal Data

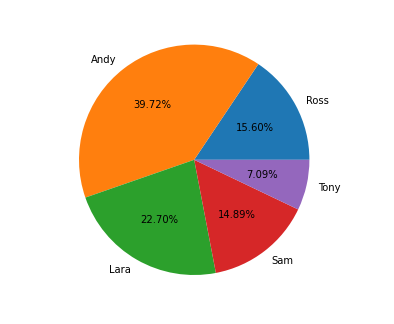

可以使用分组方法分析名义数据。变量可以按组排序,并且可以确定每个类别的频率或百分比。此外还可以以图形方式显示数据,例如使用饼图。

Using the grouping approach, nominal data can be analyzed. The variables may be sorted into groups, and the frequency or percentage can be determined for each category. The data may also be shown graphically, for example using a pie chart.

尽管名义数据不能使用数学运算符处理,但仍可以使用统计技术对其进行研究。假设检验是评估和分析数据的其中一种方法。

Although though nominal data cannot be processed using mathematical operators, they may still be studied using statistical techniques. Hypothesis testing is one approach to assess and analyse the data.

使用名义数据,可以使用卡方检验等非参数检验来检验假设。卡方检验的目的是评估给定值的预测频率和实际频率之间是否存在统计上显着的差异。

With nominal data, nonparametric tests such as the chi-squared test may be used to test hypotheses. The purpose of the chi-squared test is to evaluate whether there is a statistically significant discrepancy between the predicted frequency and the actual frequency of the provided values.

@[s2}

Ordinal Data

序数数据是统计数据中的一种数据类型,其中值按自然顺序排列。序数数据最重要的一点是,您无法区分数据值之间的差异。大多数情况下,数据类别的宽度与底层属性的增量不匹配。

Ordinal data is a type of data in statistics where the values are in a natural order. One of the most important things about ordinal data is that you can’t tell what the differences between the data values are. Most of the time, the width of the data categories doesn’t match the increments of the underlying attribute.

在某些情况下,可以通过对数据的值进行分组来找到间隔数据或比率数据的特征。例如,收入范围是序数数据,而实际收入是比率数据。

In some cases, the characteristics of interval or ratio data can be found by grouping the values of the data. For instance, the ranges of income are ordinal data, while the actual income is ratio data.

序数数据不能像间隔或比率数据一样使用数学运算符进行更改。因此,中位数是找出序数数据集中间位置的唯一方法。

Ordinal data can’t be changed with mathematical operators like interval or ratio data can. Because of this, the median is the only way to figure out where the middle of a set of ordinal data is.

此数据类型广泛存在于金融和经济领域。考虑一下一项研究各个国家 GDP 水平的经济研究。如果该报告根据各个国家的 GDP 对其进行评级,则排名就是序数统计数据。

This data type is widely found in the fields of finance and economics. Consider an economic study that examines the GDP levels of various nations. If the report rates the nations based on their GDP, the rankings are ordinal statistics.

@[s3}

Analyzing Ordinal Data

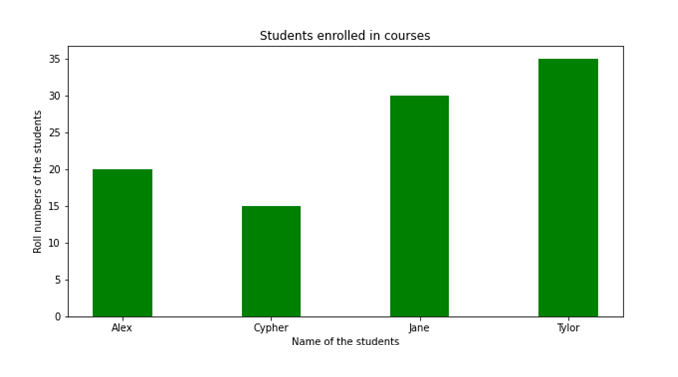

使用可视化工具评估有序数据是最简单的方法。例如,该数据可以显示为一个表格,其中每一行代表一个独立的类别。此外,它们可以使用不同的图表进行图形化表示。条形图是用来显示此类数据最流行的图形样式。

Using visualisation tools to evaluate ordinal data is the easiest method. For example, the data may be displayed as a table where each row represents a separate category. In addition, they may be represented graphically using different charts. The bar chart is the most popular style of graph used to display these types of data.

有序数据也可以使用假设检验等复杂的统计分析方法进行研究。需要注意的是,t 检验和 ANOVA 等参数化过程不能用于这些数据集。只有非参数检验(如曼惠特尼 U 检验或威尔科克森配对检验)可以用来评估关于数据的空假设。

Ordinal data may also be studied using sophisticated statistical analysis methods like hypothesis testing. Note that parametric procedures such as the t-test and ANOVA cannot be used to these data sets. Only nonparametric tests, such as the Mann-Whitney U test or Wilcoxon Matched-Pairs test, may be used to evaluate the null hypothesis about the data.

Qualitative Data Collection Methods

以下是一些收集定性数据的方法和收集方法——

Below are some approaches and collection methods to collect qualitative data −

-

Data Records − Utilizing data that is already existing as the data source is a best technique to do qualitative research. Similar to visiting a library, you may examine books and other reference materials to obtain data that can be utilised for research.

-

Interviews − Personal interviews are one of the most common ways to get deductive data for qualitative research. The interview may be casual and not have a set plan. It is often like a conversation. The interviewer or researcher gets the information straight from the interviewee.

-

Focus Groups − Focus groups are made up of 6 to 10 people who talk to each other. The moderator’s job is to keep an eye on the conversation and direct it based on the focus questions.

-

Case Studies − Case studies are in-depth analyses of an individual or group, with an emphasis on the relationship between developmental characteristics and the environment.

-

Observation − It is a technique where the researcher observes the object and take down transcript notes to find out innate responses and reactions without prompting.

Quantitative Data

定量数据由数值组成,具有数值特征,并且可以对这种类型的数据执行数学运算,例如加法。定量数据由于其定量特性而具有数学可验证性和可评估性。

Quantitative data consists of numerical values, has numerical features, and mathematical operations can be performed on this type of data such as addition. Quantitative data is mathematically verifiable and evaluable due to its quantitative character.

它们数学导出的简单性使得管理不同参数的测量成为可能。通常,它是通过授予人群一部分的问卷调查、民意调查或调查来收集的,用于统计分析。研究人员能够将收集到的研究结果应用于整个人群。

The simplicity of their mathematical derivations makes it possible to govern the measurement of different parameters. Typically, it is gathered for statistical analysis through surveys, polls, or questionnaires given to a subset of a population. Researchers are able to apply the collected findings to an entire population.

Types of Quantitative Data

定量数据主要有两种类型,它们是——

There are mainly two types of quantitative data, they are −

Discrete Data

Discrete Data

这些是只能采用特定值的数据,而不是范围。例如,有关人群血型或性别的信息被视为离散数据。

These are data that can only take on certain values, as opposed to a range. For instance, data about the blood type or gender of a population is considered discrete data.

离散定量数据的示例可能是您网站的访问者数量;一天可能有 150 次访问,但不可能有 150.6 次访问。通常,饼状图、条形图和饼图用于表示离散数据。

Example of discrete quantitative data may be the number of visitors to your website; you could have 150 visits in one day, but not 150.6 visits. Usually, tally charts, bar charts, and pie charts are used to represent discrete data.

Characteristics of Discrete Data

Characteristics of Discrete Data

由于总结和计算离散数据很简单,因此它通常用于基本的统计分析。让我们检查一下离散数据的一些其他基本特征——

Since it is simple to summarise and calculate discrete data, it is often utilized in elementary statistical analysis. Let’s examine some other essential characteristics of discrete data −

-

Discrete data is made up of discrete variables that are finite, measurable, countable, and can’t be negative (5, 10, 15, and so on).

-

Simple statistical methods, like bar charts, line charts, and pie charts, make it easy to show and explain discrete data.

-

Data can also be categorical, which means it has a fixed number of data values, like a person’s gender.

-

Data that is both time- and space-bound is spread out in a random way. Discrete distributions make it easier to look at discrete values.

Continuous Data

这些数据可能在一定范围内取值,包括最大值和最小值。最大值和最小值之间的差称为数据范围。例如,你学校学生的身高和体重。这被认为是连续数据。连续数据的表格表示称为频数分布。这些可以利用直方图直观地描述。

These are data that may take values between a certain range, including the greatest and lowest possible. The difference between the greatest and least value is known as the data range. For instance, the height and weight of your school’s children. This is considered continuous data. The tabular representation of continuous data is known as a frequency distribution. These may be depicted visually using histograms.

Characteristics of continuous data

Characteristics of continuous data

另一方面,连续数据可以是数字,或者随时间和日期变化。由于可能的值是无限的,所以此数据类型使用高级的统计分析方法。连续数据的以下重要特征 −

Continuous data, on the other hand, can be either numbers or spread out over time and date. This data type uses advanced statistical analysis methods because there are an infinite number of possible values. The important characteristics about continuous data are −

-

Continuous data changes over time, and at different points in time, it can have different values.

-

Random variables, which may or may not be whole numbers, make up continuous data.

-

Data analysis tools like line graphs, skews, and so on are used to measure continuous data.

-

One type of continuous data analysis that is often used is regression analysis.

Quantitative Data Collection Methods

下面是一些用于收集定量数据的方法和采集方法 −

Below are some approaches and collection methods to collect quantitative data −

-

Surveys and Questionnaires − These types of research are good for getting detailed feedback from users and customers, especially about how people feel about a product, service, or experience.

-

Open-source Datasets − There are a lot of public datasets that can be found online and analysed for free. Researchers sometimes look at data that has already been collected and try to figure out what it means in a way that fits their own research project.

-

Experiments − A common method is an experiment, which usually has a control group and an experimental group. The experiment is set up so that it can be controlled and the conditions can be changed as needed.

-

Sampling − When there are a lot of data points, it may not be possible to survey each person or data point. In this case, quantitative research is done with the help of sampling. Sampling is the process of choosing a sample of data that is representative of the whole. The two types of sampling are Random sampling (also called probability sampling), and non-random sampling.

Types of Data Collection

根据来源,数据收集可以分为两类 −

Data collection can be classified into two types according to the source −

-

Primary Data − These are the data that are acquired for the first time for a particular purpose by an investigator. Primary data are 'pure' in the sense that they have not been subjected to any statistical manipulations and are authentic. Examples of primary data include the Census of India.

-

Secondary Data − These are the data that were initially gathered by a certain entity. This indicates that this kind of data has already been gathered by researchers or investigators and is accessible in either published or unpublished form. This data is impure because statistical computations may have previously been performed on it. For example, Information accessible on the website of the Government of India or the Department of Finance, or in other archives, books, journals, etc.

Big Data

大数据被定义为具有更大数据量的数据,需要克服后勤方面的挑战来处理它们。大数据指的是更大、更复杂的数据集合,特别是来自新型数据源的数据。一些数据集非常庞大,以至于传统的的数据处理软件无法处理它们。然而,这些海量数据可以用来解决以前无法解决的业务难题。

Big data is defined as data with a larger volume and require overcoming logistical challenges to deal with them. Big data refers to bigger, more complicated data collections, particularly from novel data sources. Some data sets are so extensive that conventional data processing software is incapable of handling them. But, these vast quantities of data can be use to solve business challenges that were previously unsolvable.

数据科学是关于如何分析巨量数据并从中获取信息的研究。你可以将大数据和数据科学比作原油和炼油厂。数据科学和大数据源于统计学和传统的数据管理方式,但现在被视为独立的领域。

Data Science is the study of how to analyse huge amount of data and get the information from them. You can compare big data and data science to crude oil and an oil refinery. Data Science and big data grew out of statistics and traditional ways of managing data, but they are now seen as separate fields.

人们常常使用三个 V 来描述大数据的特性 −

People often use the three Vs to describe the characteristics of big data −

-

Volume − How much information is there?

-

Variety − How different are the different kinds of data?

-

Velocity − How fast do new pieces of information get made?

How do we use Data in Data Science?

每条数据都必须经过预处理。这是一系列将原始数据转换为更易理解且有价值的格式以供进一步处理的基本流程。常见流程为−

Every data must undergo pre-processing. This is an essential series of processes that converts raw data into a more comprehensible and valuable format for further processing. Common procedures are −

-

Collect and Store the Dataset

-

Data Cleaning

-

Data Integration

-

Data Transformation

我们将在接下来的章节中详细讨论这些流程。

We will discuss these processes in detail in upcoming chapters.

Data Science - Lifecycle

What is Data Science Lifecycle?

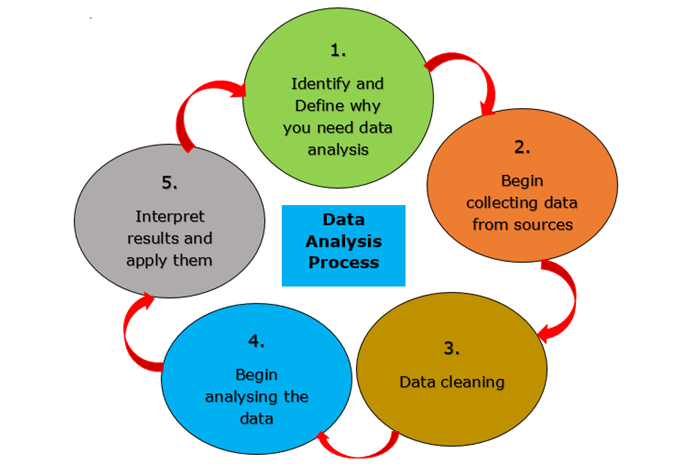

数据科学生命周期是一种寻找数据问题的解决方案的系统方法,它显示了开发、交付/部署和维护数据科学项目所需的步骤。我们可以假设一个通用数据科学生命周期,其中包含一些最重要的常见步骤,如下面的图片所示,但由于每个项目不同,因此某些步骤可能因项目而异,因为并非每个数据科学项目都是以相同的方式构建的

A data science lifecycle is a systematic approach to find a solution for a data problem which shows the steps that are taken to develop, deliver/deploy , and maintain a data science project. We can assume a general data science lifecycle with some of the most important common steps that is shown in the figure given below but some steps may differ from project to project as each project is different so life cycle may differ since not every data science project is built the same way

一种标准数据科学生命周期方法包括使用机器学习算法和统计程序,这些程序会产生更准确的预测模型。数据提取、准备、清理、建模、评估等,是数据科学中最重要的阶段。这种技术在数据科学领域被称为“数据挖掘的跨行业标准程序”。

A standard data science lifecycle approach comprises the use of machine learning algorithms and statistical procedures that result in more accurate prediction models. Data extraction, preparation, cleaning, modelling, assessment, etc., are some of the most important data science stages. This technique is known as "Cross Industry Standard Procedure for Data Mining" in the field of data science.

How many phases are there in the Data Science Life Cycle?

数据科学生命周期主要有六个阶段−

There are mainly six phases in Data Science Life Cycle −

Identifying Problem and Understanding the Business

数据科学生命周期从“为什么?”开始,就像任何其他业务生命周期一样。数据科学过程中最重要的部分之一是找出问题所在。这有助于找到一个明确的目标,可以围绕该目标制定所有其他步骤。简而言之,尽早了解业务目标非常重要,因为它将决定分析的最终目标。

The data science lifecycle starts with "why?" just like any other business lifecycle. One of the most important parts of the data science process is figuring out what the problem is. This helps to find a clear goal around which all the other steps can be planned out. In short, it’s important to know the business goal as earliest because it will determine what the end goal of the analysis will be.

此阶段应评估业务趋势、评估可比分析的案例研究,并研究行业领域。该组将根据可用的员工、设备、时间和技术评估项目的可行性。一旦发现并评估了这些因素,将制定一个初步假设来解决现有环境造成的业务问题。本阶段应−

This phase should evaluate the trends of business, assess case studies of comparable analyses, and research the industry’s domain. The group will evaluate the feasibility of the project given the available employees, equipment, time, and technology. When these factors been discovered and assessed, a preliminary hypothesis will be formulated to address the business issues resulting from the existing environment. This phrase should −

-

Specify the issue that why the problem must be resolved immediately and demands answer.

-

Specify the business project’s potential value.

-

Identify dangers, including ethical concerns, associated with the project.

-

Create and convey a flexible, highly integrated project plan.

Data Collection

数据科学生命周期的下一步是数据收集,这意味着从适当且可靠的来源获取原始数据。收集的数据可以是有序的,也可以是无序的。数据可以从网站日志、社交媒体数据、在线数据存储库中收集,甚至可以使用 API、网络抓取或可能存在于 Excel 或其他来源中的数据从在线来源流式传输数据。

The next step in the data science lifecycle is data collection, which means getting raw data from the appropriate and reliable source. The data that is collected can be either organized or unorganized. The data could be collected from website logs, social media data, online data repositories, and even data that is streamed from online sources using APIs, web scraping, or data that could be in Excel or any other source.

从事这项工作的人员应该了解可用的不同数据集之间的差异,以及组织如何投资其数据。专业人士很难追踪每条数据的来源,以及它是否是最新的。在数据科学项目整个生命周期内,追踪这些信息非常重要,因为它可以帮助验证假设或运行任何其他新实验。

The person doing the job should know the difference between the different data sets that are available and how an organization invests its data. Professionals find it hard to keep track of where each piece of data comes from and whether it is up to date or not. During the whole lifecycle of a data science project, it is important to keep track of this information because it could help test hypotheses or run any other new experiments.

信息可以通过调查或更流行的自动数据收集方法(如互联网 Cookie)收集,互联网 Cookie 是未经分析的数据的主要来源。

The information may be gathered by surveys or the more prevalent method of automated data gathering, such as internet cookies which is the primary source of data that is unanalysed.

我们还可以使用开放源数据集等辅助数据。我们可以从许多可用网站收集数据,例如

We can also use secondary data which is an open-source dataset. There are many available websites from where we can collect data for example

-

Kaggle (https://www.kaggle.com/datasets),

-

Google Public Datasets (https://cloud.google.com/bigquery/public-data/)

Python 中有一些预定义的数据集。让我们从 Python 中导入鸢尾花数据集,并使用它来定义数据科学的阶段。

There are some predefined datasets available in python. Let’s import the Iris dataset from python and use it to define phases of data science.

from sklearn.datasets import load_iris

import pandas as pd

# Load Data

iris = load_iris()

# Create a dataframe

df = pd.DataFrame(iris.data, columns = iris.feature_names)

df['target'] = iris.target

X = iris.dataData Processing

从可靠的来源收集到高质量的数据后,下一步是对其进行处理。数据处理的目的是确保所获取的数据中是否存在任何问题,以便在进入下一阶段之前解决这些问题。如果没有这一步,我们可能会产生错误或不准确的发现。

After collecting high-quality data from reliable sources, next step is to process it. The purpose of data processing is to ensure if there is any problem with the acquired data so that it can be resolved before proceeding to the next phase. Without this step, we may produce mistakes or inaccurate findings.

所获得的数据可能存在若干困难。例如,数据可能有多行或多列缺少若干值。它可能包含若干离群值、不准确的数字、具有不同时区的 timestamp 等。数据可能潜在地存在日期范围问题。在某些国家/地区,日期的格式为 DD/MM/YYYY,而在其他国家/地区,日期的格式为 MM/DD/YYYY。在数据收集过程中可能会出现许多问题,例如,如果从多个温度计中收集数据且其中任何一个出现故障,则可能需要丢弃或重新收集数据。

There may be several difficulties with the obtained data. For instance, the data may have several missing values in multiple rows or columns. It may include several outliers, inaccurate numbers, timestamps with varying time zones, etc. The data may potentially have problems with date ranges. In certain nations, the date is formatted as DD/MM/YYYY, and in others, it is written as MM/DD/YYYY. During the data collecting process numerous problems can occur, for instance, if data is gathered from many thermometers and any of them are defective, the data may need to be discarded or recollected.

在此阶段,必须解决与数据相关的各种问题。其中一些问题有多种解决方案,例如,如果数据包含缺失值,我们可以用零或列的平均值替换它们。不过,如果该列缺少大量值,则最好完全移除该列,因为它拥有太少数据而无法用于求解问题的数据科学生命周期的方法。

At this phase, various concerns with the data must be resolved. Several of these problems have multiple solutions, for example, if the data includes missing values, we can either replace them with zero or the column’s mean value. However, if the column is missing a large number of values, it may be preferable to remove the column completely since it has so little data that it cannot be used in our data science life cycle method to solve the issue.

当时区混乱时,我们无法使用这些列中的数据,可能必须将其移除,直至我们能够定义提供的时间戳中使用的时区。如果知道收集每个时间戳所用的时区,我们可能会将所有时间戳数据转换为某个时区。此种方式有许多策略可以解决所获取数据中可能存在的各种问题。

When the time zones are all mixed up, we cannot utilize the data in those columns and may have to remove them until we can define the time zones used in the supplied timestamps. If we know the time zones in which each timestamp was gathered, we may convert all timestamp data to a certain time zone. In this manner, there are a number of strategies to address concerns that may exist in the obtained data.

接下来我们将使用 Python 访问数据,然后将其存储在数据框内。

We will access the data and then store it in a dataframe using python.

from sklearn.datasets import load_iris

import pandas as pd

import numpy as np

# Load Data

iris = load_iris()

# Create a dataframe

df = pd.DataFrame(iris.data, columns = iris.feature_names)

df['target'] = iris.target

X = iris.data机器学习模型的所有数据都必须以数字表示。这意味着,如果数据集包含分类数据,则必须将其转换为数字值,然后才能执行模型。因此,我们将实施标签编码。

All data must be in numeric representation for machine learning models. This implies that if a dataset includes categorical data, it must be converted to numeric values before the model can be executed. So we will be implementing label encoding.

Label Encoding

Label Encoding

species = []

for i in range(len(df['target'])):

if df['target'][i] == 0:

species.append("setosa")

elif df['target'][i] == 1:

species.append('versicolor')

else:

species.append('virginica')

df['species'] = species

labels = np.asarray(df.species)

df.sample(10)

labels = np.asarray(df.species)

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

le.fit(labels)

labels = le.transform(labels)

df_selected1 = df.drop(['sepal length (cm)', 'sepal width (cm)', "species"], axis=1)Data Analysis

数据分析 探索性数据分析 (EDA) 是一组用于分析数据的可视化技术。采用此方法,我们可能会获取有关数据统计摘要的具体详细信息。此外,我们将能够处理重复数字、离群值,并在集合内找出趋势或模式。

Data analysis Exploratory Data Analysis (EDA) is a set of visual techniques for analysing data. With this method, we may get specific details on the statistical summary of the data. Also, we will be able to deal with duplicate numbers, outliers, and identify trends or patterns within the collection.

在此阶段,我们尝试对所获取和已处理的数据有更深入的理解。我们应用统计和分析技术对数据做出结论,并确定我们数据集中的多列之间的联系。借助图片、图表、流程图、绘图等,我们可以使用可视化来更好地理解和描述数据。

At this phase, we attempt to get a better understanding of the acquired and processed data. We apply statistical and analytical techniques to make conclusions about the data and determine the link between several columns in our dataset. Using pictures, graphs, charts, plots, etc., we may use visualisations to better comprehend and describe the data.

专业人员使用均值和中位数等数据统计技术来更好地理解数据。他们还使用直方图、频谱分析和总体分布来可视化数据并评估其分布模式。数据将根据问题进行分析。

Professionals use data statistical techniques such as the mean and median to better comprehend the data. Using histograms, spectrum analysis, and population distribution, they also visualise data and evaluate its distribution patterns. The data will be analysed based on the problems.

Example

以下代码用于检查数据集中是否存在任何空值:

Below code is used to check if there are any null values in the dataset −

df.isnull().sum()Output

sepal length (cm) 0

sepal width (cm) 0

petal length (cm) 0

petal width (cm) 0

target 0

species 0

dtype: int64从上述输出中我们可以得出结论,数据集中没有空值,因为列中所有空值的总和为 0。

From the above output we can conclude that there are no null values in the dataset as the sum of all the null values in the column is 0.

我们将使用 shape 参数来检查数据集的形状(行、列)。

We will be using shape parameter to check the shape (rows, columns) of the dataset −

Example

df.shapeOutput

(150, 5)接下来我们将使用 info() 检查列及其数据类型:

Now we will use info() to check the columns and their data types −

Example

df.info()Output

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 150 entries, 0 to 149

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 sepal length (cm) 150 non-null float64

1 sepal width (cm) 150 non-null float64

2 petal length (cm) 150 non-null float64

3 petal width (cm) 150 non-null float64

4 target 150 non-null int64

dtypes: float64(4), int64(1)

memory usage: 6.0 KB只有一列包含分类数据,而其他列包含非空数字值。

Only one column contains category data, whereas the other columns include non-Null numeric values.

现在我们将在数据上使用 describe()。describe() 方法对数据集执行基础统计计算,例如极值、数据点数量、标准偏差等。任何缺失值或 NaN 值都会立即被忽略。describe() 方法准确描绘了数据的分布。

Now we will use describe() on the data. The describe() method performs fundamental statistical calculations to a dataset, such as extreme values, the number of data points, standard deviation, etc. Any missing or NaN values are immediately disregarded. The describe() method accurately depicts the distribution of data.

Example

df.describe()Output

Data Visualization

Target column - 我们的目标列将是 Species 列,因为我们最终只需要基于物种的结果即可。

Target column − Our target column will be the Species column since we will only want results based on species in the end.

我们将使用 Matplotlib 和 seaborn 库进行数据可视化。

Matplotlib and seaborn library will be used for data visualization.

以下是物种计量图−

Below is the species countplot −

Example

import seaborn as sns

import matplotlib.pyplot as plt

sns.countplot(x='species', data=df, )

plt.show()Output

数据科学中还有许多其他可视化图。要了解更多信息,请参阅 https://www.tutorialspoint.com/machine_learning_with_python

There are many other visualization plots in Data Science. To know more about them refer https://www.tutorialspoint.com/machine_learning_with_python

Data Modeling

数据建模是数据科学中最重要的方面之一,有时被称为数据分析的核心。模型的预期输出应从已准备和分析的数据中得出。在达到指定标准之前,将选择并构建执行数据模型所需的模型。

Data Modeling is one of the most important aspects of data science and is sometimes referred to as the core of data analysis. The intended output of a model should be derived from prepared and analysed data. The environment required to execute the data model will be chosen and constructed, before achieving the specified criteria.

在这个阶段,我们将为生产相关任务开发数据集以对模型进行训练和测试。它还涉及选择正确的模式类型并确定问题是否涉及分类、回归或聚类。在分析模型类型后,我们必须选择适当的实现算法。必须仔细执行,因为从提供的数据中提取相关见解至关重要。

At this phase, we develop datasets for training and testing the model for production-related tasks. It also involves selecting the correct mode type and determining if the problem involves classification, regression, or clustering. After analysing the model type, we must choose the appropriate implementation algorithms. It must be performed with care, as it is crucial to extract the relevant insights from the provided data.

机器学习在这里发挥了作用。机器学习基本上分为分类、回归或聚类模型,每个模型都有一些算法应用于数据集以获取相关信息。这些模型用于此阶段。我们将在机器学习章节详细讨论这些模型。

Here machine learning comes in picture. Machine learning is basically divided into classification, regression, or clustering models and each model have some algorithms which is applied on the dataset to get the relevant information. These models are used in this phase. We will discuss these models in detail in the machine learning chapter.

Model Deployment

我们已经到达数据科学生命周期的最后阶段。经过详细的审查过程后,该模型终于可以按照所需的格式在所选渠道中部署。请注意,机器学习模型只有在生产中部署后才有用。一般来说,这些模型与产品和应用程序相关联并集成。

We have reached the final stage of the data science lifecycle. The model is finally ready to be deployed in the desired format and chosen channel after a detailed review process. Note that the machine learning model has no utility unless it is deployed in the production. Generally speaking, these models are associated and integrated with products and applications.

模型部署包含建立将模型部署到市场消费者或另一个系统所需的交付方法。机器学习模型也在设备上实施,并获得接受和吸引力。根据项目的复杂性,此阶段可能从 Tableau 仪表板的基本模型输出到拥有数百万用户的复杂云部署。

Model Deployment contains the establishment of a delivery method necessary to deploy the model to market consumers or to another system. Machine learning models are also being implemented on devices and gaining acceptance and appeal. Depending on the complexity of the project, this stage might range from a basic model output on a Tableau Dashboard to a complicated cloud-based deployment with millions of users.

Who are all involved in Data Science lifecycle?

数据正在从个人层面到组织层面,在大量的服务器和数据仓库中生成、收集和存储。但是您将如何访问这个庞大的数据存储库?这就是数据科学家介入的地方,因为他们专门从非结构化文本和统计数据中提取见解和模式。

Data is being generated, collected, and stored on voluminous servers and data warehouses from the individual level to the organisational level. But how will you access this massive data repository? This is where the data scientist comes in, since he or she is a specialist in extracting insights and patterns from unstructured text and statistics.

下文,我们将介绍参加数据科学生命周期的许多数据科学团队工作简介。

Below, we present the many job profiles of the data science team participating in the data science lifecycle.

S.No |

Job Profile & Role |

1 |

*Business Analyst*Understanding business requirements and find the right target customers. |

2 |

*Data Analyst*Format and clean the raw data, interpret and visualise them to perform the analysis and provide the technical summary of the same |

3 |

*Data Scientists*Improve quality of machine learning models. |

4 |

*Data Engineer*They are in charge of gathering data from social networks, websites, blogs, and other internal and external web sources ready for further analysis. |

5 |

*Data Architect*Connect, centralise, protect, and keep up with the organization’s data sources. |

6 |

*Machine Learning Engineer*Design and implement machine learning-related algorithms and applications. |

Data Science - Prerequisites

你需要具备多种技术和非技术技能才能成为一名成功的数据科学家。具备某些技能对于成为一名知识渊博的数据科学家至关重要,而另一些技能只是为了让数据科学家的事情变得更容易。不同的工作角色决定了你必须具备的特定技能熟练程度。

You need to have several technical and non-technical skills to become a successful Data Scientist. Some of the skills are essential to have to become a well-versed data scientist while some for just for making thing things easier for a data scientist. Different job roles determine the level of skill-specific proficiency you need to possess.

以下列出了一些成为数据科学家所需具备的技能。

Given below are some skills you will require to become a data scientist.

Technical Skills

Python

数据科学家大量使用 Python,因为它是最流行的编程语言之一,易于学习,并拥有可用于数据操作和数据分析的大型库。因为它是一种灵活的语言,所以它可以在数据科学的所有阶段中使用,例如数据挖掘或运行应用程序。Python 拥有一个庞大的开源库,其中包含强大的数据科学库,如 Numpy、Pandas、Matplotlib、PyTorch、Keras、Scikit Learn、Seaborn 等。这些库有助于完成不同的数据科学任务,例如读取大型数据集,绘制和可视化数据和相关性,训练和拟合机器学习模型以适应你的数据,评估模型的性能等。

Data Scientists use Python a lot because it is one of the most popular programming languages, easy to learn and has extensive libraries that can be used for data manipulation and data analysis. Since it is a flexible language, it can be used in all stages of Data Science, such as data mining or running applications. Python has a huge open-source library with powerful Data Science libraries like Numpy, Pandas, Matplotlib, PyTorch, Keras, Scikit Learn, Seaborn, etc. These libraries help with different Data Science tasks, such as reading large datasets, plotting and visualizing data and correlations, training and fitting machine learning models to your data, evaluating the performance of the model, etc.

SQL

SQL 是在开始数据科学之前需要的另一个基本条件。与其他编程语言相比,SQL 相对简单,但要成为一名数据科学家是必需的。此编程语言用于管理和查询关系数据库存储的数据。我们可以使用 SQL 检索、插入、更新和删除数据。要从数据中提取见解,能够创建复杂的 SQL 查询(包括连接、分组、具有等)至关重要。连接方法使你能够同时查询多个表格。SQL 还可以执行分析操作和转换数据库结构。

SQL is an additional essential prerequisite before getting started with Data Science. SQL is relatively simple compared to other programming languages, but is required to become a Data Scientist. This programming language is used to manage and query relational database-stored data. We can retrieve, insert, update, and remove data with SQL. To extract insights from data, it is crucial to be able to create complicated SQL queries that include joins, group by, having, etc. The join method enables you to query many tables simultaneously. SQL also enables the execution of analytical operations and the transformation of database structures.

R

R 是一种高级语言,用于制作复杂的统计模型。R 还允许你使用阵列、矩阵和向量。R 以其图形库而闻名,使用户能够绘制精美的图表并使图表易于理解。

R is an advanced language that is used to make complex models of statistics. R also lets you work with arrays, matrices, and vectors. R is well-known for its graphical libraries, which let users draw beautiful graphs and make them easy to understand.

借助 R Shiny,程序员可以使用 R 制作 Web 应用程序,用于将可视化元素嵌入到网页中,并为用户提供大量与其交互的方式。此外,数据提取是数据科学的一个关键部分。R 允许你将 R 代码连接到数据库管理系统。

With R Shiny, programmers can make web applications using R, which is used to embed visualizations in web pages and gives users a lot of ways to interact with them. Also, data extraction is a key part of the science of data. R lets you connect your R code to database management systems.

R 还为你提供了更高级数据分析的多种选择,例如构建预测模型、机器学习算法等。R 还有许多用于处理图像的软件包。

R also gives you a number of options for more advanced data analysis, such as building prediction models, machine learning algorithms, etc. R also has a number of packages for processing images.

Statistics

在数据科学中,高度依赖统计才能存储和翻译数据模式用于预测的高级机器算法。数据科学家利用统计执行数据收集、评估、分析和从数据中推论结论,以及使用相关的量化数学模型和变量。数据科学家担任程序员、研究员和商务主管等职位,所有这些学科都具有统计基础。统计在数据科学中的重要性与编程语言相当。

In data science, advanced machine learning algorithms that stores and translate data patterns for prediction rely heavily on statistics. Data scientists utilize statistics to collect, assess, analyze, and derive conclusions from data, as well as to apply relevant quantitative mathematical models and variables. Data scientists work as programmers, researchers, and executives in business, among other roles, all of these disciplines have a statistical foundation. The importance of statistics in data science is comparable to that of programming languages.

Hadoop

数据科学家在海量数据上执行操作,但有时系统的内存无法处理这些海量数据。那么如何在如此海量的数据上执行数据处理?这里 Hadoop 就发挥了作用。它可用于快速分割数据并将其传输至多个服务器以进行数据处理和其他操作(如筛选)。尽管 Hadoop 基于分布式计算概念,但许多公司要求数据科学家基本了解分布式系统原则(如 Pig、Hive、MapReduce 等)。许多公司已经开始使用 Hadoop 即服务(HaaS),这是云中 Hadoop 的另一个名称,这样数据科学家就不需要了解 Hadoop 的内部工作原理。

Data scientists perform operations on enormous amount of data but sometimes the memory of the system is not able to carry out processing on these huge amount of data. So how data processing will be performed on such huge amount of data? Here Hadoop comes in the picture. It is used to rapidly divide and transfer data to numerous servers for data processing and other actions such as filtering. While Hadoop is based on the concept of Distributed Computing, several firms require that Data Scientists have a fundamental understanding of Distributed System principles such as Pig, Hive, MapReduce, etc. Several firms have begun to use Hadoop-as-a-Service (HaaS), another name for Hadoop in the cloud, so that Data Scientists do not need to understand Hadoop’s inner workings.

Spark

Spark 是一个用于大数据计算的框架,它在数据科学领域中越来越流行。Hadoop 从磁盘读取数据并写入数据,而 Spark 计算结果在系统内存中,使得它与 Hadoop 相比更容易且更快。Apache Spark 的功能是加快复杂算法的速度,其专门用于数据科学。如果数据集很大,它会分布式处理数据,这会节省大量时间。使用 Apache Spark 的主要原因在于其速度和为运行数据科学任务和流程提供的平台。Spark 可以在一台计算机或多个计算机集群上运行,使得使用 Spark 非常方便。

Spark is a framework for big data computation like Hadoop and has gained some popularity in Data Science world. Hadoop reads data from the disk and writes data to the disk while on the other hand Spark Calculates the computation results in the system memory, making it comparatively easy and faster than Hadoop. The function of Apache Spark is to facilitate the speed of the complex algorithms and it is specially designed for the data science. If the dataset is huge then it distributes data processing which saves a lot of time. The main reason of using apache spark is because of its speed and the platform it provides to run data science tasks and processes. It is possible to run Spark on a single machine or a cluster of machines which makes it convenient to work with.

Machine Learning

机器学习是数据科学的关键组成部分。机器学习算法是分析海量数据的有效方式。它可以帮助自动化各种相关数据科学操作。但是,对机器学习原理的深入了解并非在行业内开始职业生涯的必需条件。大多数数据科学家缺乏机器学习技能。只有极少数数据科学家对推荐引擎、对抗性学习、强化学习、自然语言处理、异常值检测、时序分析、计算机视觉、生存分析等高级主题拥有广泛的知识和专门知识。因此,这些能力将帮助你在数据科学职业中脱颖而出。

Machine Learning is crucial component of Data Science. Machine Learning algorithms are an effective method for analysing massive volumes of data. It may assist in automating a variety of Data Science-related operations. Nevertheless, an in-depth understanding of Machine Learning principles is not required to begin a career in this industry. The majority of Data Scientists lack skills in Machine Learning. Just a tiny fraction of Data Scientists has extensive knowledge and expertise in advanced topics such as Recommendation Engines, Adversarial Learning, Reinforcement Learning, Natural Language Processing, Outlier Detection, Time Series Analysis, Computer Vision, Survival Analysis, etc. These competencies will consequently help you stand out in a Data Science profession.

Non-Technical Skills

Understanding of Business Domain

数据科学家对特定业务领域或涉猎领域的了解越深入,就越容易对该特定领域的数据进行分析。

More understanding one has for a particular business area or domain, easier it will be for a data scientist to do the analysis on the data from that particular domain.

Understanding of Data

数据科学涉及所有数据,因此了解数据非常重要,例如什么是数据、如何存储数据、表、行和列的知识。

Data Science is all about data so it is very important to have an understanding of data that what is data, how data is stored, knowledge of tables, rows and columns.

Critical and Logical Thinking

批判性思维是在理解和明确了解思想如何匹配过程中明确、合乎逻辑地思考的能力。在数据科学中,您需要具备批判性思维,以便获得有用的见解并改善业务运营。批判性思维可能是数据科学中最重要的技能之一。它使他们能够更深入地挖掘信息并找出最重要的事情。

Critical thinking is the ability to think clearly and logically while figuring out and understanding how ideas fit together. In data science, you need to be able to think critically to get useful insights and improve business operations. Critical thinking is probably one of the most important skills in data science. It makes it easier for them to dig deeper into information and find the most important things.

Product Understanding

设计模型并非数据科学家的全部工作。数据科学家必须提出可用于提高产品质量的见解。通过系统方法,如果专业人士了解整个产品,他们可以快速加速。他们可以帮助模型启动(引导)并改善特性工程。此技能还可以帮助他们通过揭示以前可能没有想到过的有关产品的想法和见解来改善他们的讲故事能力。

Designing models isn’t the entire job of a data scientist. Data scientists have to come up with insights that can be used to improve the quality of products. With a systematic approach, professionals can accelerate quickly if they understand the whole product. They can help models get started (bootstrap) and improve feature engineering. This skill also helps them improve their storytelling by revealing thoughts and insights about products that they may not have thought of before.

Adaptability

在现代人才获取流程中,数据科学家最抢手的软技能之一是适应能力。由于新技术正在更快地被制造和使用,因此专业人士必须快速学会如何使用它们。作为一名数据科学家,您必须跟上不断变化的业务趋势并能够适应。

One of the most sought-after soft skills for data scientists in the modern talent acquisition process is the ability to adapt. Because new technologies are being made and used more quickly, professionals have to quickly learn how to use them. As a data scientist, you have to keep up with changing business trends and be able to adapt.

Data Science - Applications

数据科学涉及不同的学科,如数学和统计建模、从其来源提取数据和应用数据可视化技术。它还涉及处理大数据技术以收集结构化和非结构化数据。下面,我们将看到一些数据科学的应用——

Data Science involves different disciplines like mathematical and statistical modelling, extracting data from its source and applying data visualization techniques. It also involves handling big data technologies to gather both structured and unstructured data. Below, we will see some applications of data science −

Gaming Industry

通过建立社交媒体影响力,体育组织应对许多问题。游戏公司 Zynga 推出了社交媒体游戏,例如 Zynga Poker、Farmville、Chess with Friends、Speed Guess Something 和 Words with Friends。这产生了大量的用户连接和海量数据。

By establishing a presence on social media, sports organizations deal with a number of issues. Zynga, a gaming corporation, has produced social media games like Zynga Poker, Farmville, Chess with Friends, Speed Guess Something, and Words with Friends. This has generated many user connections and large data volumes.

这就是游戏行业中数据科学的必要性所在,以便使用从所有社交网络中获取的玩家数据。数据分析为玩家提供了一种迷人、创新的娱乐方式,让他们始终领先于竞争对手!数据科学最有趣的应用之一是在游戏制作的功能和程序中。

Here comes the necessity for data science within the game business in order to use the data acquired from players across all social networks. Data analysis provides a captivating, innovative diversion for players to keep ahead of the competition! One of the most interesting applications of data science is inside the features and procedures of game creation.

Health Care

数据科学在医疗保健领域发挥着重要作用。数据科学家的责任是将所有数据科学方法集成到医疗保健软件中。数据科学家帮助从数据中收集有用的见解,以便创建预测模型。数据科学家在医疗保健领域的总体职责如下——

Data Science plays an important role in the field of healthcare. A Data Scientist’s responsibility is to integrate all Data Science methodologies into healthcare software. The Data Scientist helps in collecting useful insights from the data in order to create prediction models. The overall responsibilities of a Data Scientist in the field of healthcare are as follows −

-

Collecting information from patients

-

Analyzing hospitals' requirements

-

Organizing and classifying the data for usage

-

Implementing Data Analytics with diverse methods

-

Using algorithms to extract insights from data.

-

Developing predictive models with the development staff.

以下是数据科学的一些应用:

Given below are some of the applications of data science −

Medical Image Analysis

数据科学通过对扫描图像执行图像分析,帮助确定人体的异常,从而帮助医生制定适当的治疗计划。这些图片检查包括 X 射线、超声波、MRI(磁共振成像)、CT 扫描等。医生可以通过研究这些测试照片获得重要信息,从而为患者提供更好的护理。

Data Science helps to determine the abnormalities in a human body by performing image analysis on scanned images, hence assisting physicians in developing an appropriate treatment plan. These picture examinations include X-ray, sonography, MRI (Magnetic Resonance Imaging), and CT scan, among others. Doctors are able to give patients with better care by gaining vital information from the study of these test photos.

Predictive Analysis

使用数据科学开发的预测分析模型可以预测患者的病情。此外,它还有助于制定患者适当治疗的策略。预测分析是数据科学中一个非常重要的工具,在医疗保健业务中发挥着重要作用。

The condition of a patient is predicted by the predictive analytics model developed using Data Science. In addition, it facilitates the development of strategies for the patient’s suitable treatment. Predictive analytics is a highly important tool of data science that plays a significant part in the healthcare business.

Image Recognition

图像识别是一种图像处理技术,可以识别图像中的所有内容,包括人物、图案、徽标、物品、位置、颜色和形式。

Image recognition is a technique of image processing that identifies everything in an image, including individuals, patterns, logos, items, locations, colors, and forms.

数据科学技术已经开始识别人的面部,并将其与数据库中的所有图像进行匹配。此外,带有摄像头的手机正在生成无限数量的数字图像和视频。大量数字数据正被企业用于为客户提供更优质、更便捷的服务。通常,人工智能的面部识别系统分析所有面部特征,并将其与数据库进行比较以查找匹配项。

Data Science techniques have begun to recognize the human face and match it with all the images in their database. In addition, mobile phones with cameras are generating infinite number of digital images and videos. This vast amount of digital data is being utilized by businesses to provide customers with superior and more convenient services. Generally, the facial recognition system of AI analyses all facial characteristics and compares them to its database to find a match.

例如,iPhone 中面容锁功能中的面部检测。

For example, Facial detection in Face lock feature in iPhone.

Recommendation systems

随着在线购物的日益普及,电子商务平台能够捕捉到用户的购物偏好以及市场上各种产品的表现。这导致创建推荐系统,该系统创建预测购物者需求的模型,并展示购物者最有可能购买的产品。诸如亚马逊和 Netflix 等公司使用推荐系统,以便帮助其用户找到他们正在寻找的正确电影或产品。

As online shopping becomes more prevalent, the e-commerce platforms are able to capture users shopping preferences as well as the performance of various products in the market. This leads to creation of recommendation systems, which create models predicting the shoppers needs and show the products the shopper is most likely to buy. Companies like Amazon and Netflix use recommendation system so that they can help their user to find the correct movie or product they are looking for.

Airline Routing Planning

数据科学在航空业中呈现出众多机会。高空飞行飞机提供了大量有关发动机系统、燃油效率、天气、乘客信息等方面的数据。当该行业使用更多配备了传感器和其他数据收集技术的现代化飞机时,将创建更多数据。如果使用得当,这些数据可能会为该行业提供新的可能性。

Data Science in the Airline Industry presents numerous opportunities. High-flying aircraft provide an enormous amount of data about engine systems, fuel efficiency, weather, passenger information, etc. More data will be created when more modern aircraft equipped with sensors and other data collection technologies are used by the industry. If appropriately used, this data may provide new possibilities for the sector.

它还有助于决定是直接降落在目的地还是在中途停靠,例如航班可以有直达航线。

It also helps to decide whether to directly land at the destination or take a halt in between like a flight can have a direct route.

Finance

数据科学在银行业的重要性与相关性与数据科学在企业决策的其他领域中的重要性相关。金融业的数据科学专业人士通过帮助他们开发工具和仪表盘来增强投资流程,为公司内的相关团队,特别是投资和财务团队提供支持和帮助。

The importance and relevance of data science in the banking sector is comparable to that of data science in other areas of corporate decision-making. Professionals in data science for finance give support and assistance to relevant teams within the company, particularly the investment and financial team, by assisting them in the development of tools and dashboards to enhance the investment process.

Improvement in Health Care services

医疗保健行业处理各种数据,这些数据可分为技术数据、财务数据、患者信息、药物信息和法律法规。所有这些数据都需要以协调的方式进行分析,以产生见解,从而为医疗保健提供者和护理接受者节省成本,同时保持法律合规性。

The health care industry deals with a variety of data which can be classified into technical data, financial data, patient information, drug information and legal rules. All this data need to be analyzed in a coordinated manner to produce insights that will save cost, both for the health care provider and care receiver, while remaining legally compliant.

Computer Vision

计算机识别图像的进步涉及处理来自同一类别多个对象的成组图像数据。例如,面部识别。对这些数据集进行建模,并创建算法将模型应用于更新的图像(测试数据集)以获得满意的结果。处理这些海量数据集和创建模型需要数据科学中使用的各种工具。

The advancement in recognizing an image by a computer involves processing large sets of image data from multiple objects of same category. For example, Face recognition. These data sets are modelled, and algorithms are created to apply the model to newer images (testing dataset) to get a satisfactory result. Processing of these huge data sets and creation of models need various tools used in Data Science.

Efficient Management of Energy

随着能源消耗需求的增加,能源生产公司需要更有效地管理能源生产和分配的各个阶段。这包括优化生产方法、存储和分配机制,以及研究客户的消费模式。链接来自所有这些来源的数据并得出见解似乎是一项艰巨的任务。通过使用数据科学工具可以更轻松地实现这一点。

As the demand for energy consumption rises, the energy producing companies need to manage the various phases of the energy production and distribution more efficiently. This involves optimizing the production methods, the storage and distribution mechanisms as well as studying the customers’ consumption patterns. Linking the data from all these sources and deriving insight seems a daunting task. This is made easier by using the tools of data science.

Internet Search

多个搜索引擎使用数据科学来了解用户行为和搜索模式。这些搜索引擎使用多种数据科学方法为每个用户提供最相关的搜索结果。随着时间的推移,Google、Yahoo、Bing 等搜索引擎在几秒钟内回复搜索的能力越来越强。

Several search engines use data science to understand user behaviour and search patterns. These search engines use diverse data science approaches to give each user with the most relevant search results. Search engines such as Google, Yahoo, Bing, etc. are becoming increasingly competent at replying to searches in seconds as time passes.

Speech Recognition

Google 的语音助手、Apple 的 Siri 和 Microsoft 的 Cortana 都使用大型数据集,并由数据科学和自然语言处理 (NLP) 算法提供支持。随着更多数据的分析,语音识别软件得到改进,对人类本性的理解也得到了加深,这得益于数据科学的应用。

Google’s Voice Assistant, Apple’s Siri, and Microsoft’s Cortana all utilise large datasets and are powered by data science and natural language processing (NLP) algorithms. Speech recognition software improves and gains a deeper understanding of human nature due to the application of data science as more data is analysed.

Education

当世界经历 COVID-19 流行病时,大多数学生总是随身携带电脑。印度教育体系一直使用在线课程、作业和考试的电子提交等。对我们大多数人来说,做任何事情“在线”仍然具有挑战性。技术和当代已经发生了变革。因此,随着数据科学进入我们的教育系统,它成为教育中比以往任何时候都至关重要。

While the world experienced the COVID-19 epidemic, the majority of students were always carrying their computers. Online Courses, E-Submissions of assignments and examinations, etc., have been used by the Indian education system. For the majority of us, doing everything "online" remains challenging. Technology and contemporary times have undergone a metamorphosis. As a result, Data Science in education is more crucial than ever as it enters our educational system.

现在,讲师和学生在日常互动中的表现通过各种平台进行记录,课堂参与度和其他因素正在接受评估。因此,在线课程数量的增加提升了教育数据深度的价值。

Now, instructors’ and students' everyday interactions are being recorded through a variety of platforms, and class participation and other factors are being evaluated. As a result, the rising quantity of online courses has increased the value of Educational data’s depth.

Data Science - Machine Learning

机器学习使机器能够从数据中自动学习、从经验中提高性能并预测事物,而无需明确编程。机器学习主要涉及开发算法,让计算机能够自行从数据和过去的经验中学习。机器学习一词最早由阿瑟·塞缪尔在 1959 年提出。

Machine learning enables a machine to automatically learn from data, improve performance from experiences, and predict things without being explicitly programmed. Machine Learning is mainly concerned with the development of algorithms which allow a computer to learn from the data and past experiences on their own. The term machine learning was first introduced by Arthur Samuel in 1959.

数据科学是一门从数据中获取有用见解的科学,以便获取最关键和最相关的的信息来源。并在给定可靠数据流的情况下,使用机器学习生成预测。

Data Science is the science of gaining useful insights from data in order to get the most crucial and relevant information source. And given a dependable stream of data, generating predictions using machine learning.

数据科学和机器学习是计算机科学的子领域,重点在于分析和利用大量数据,以改进产品、服务、基础设施系统等的开发和向市场推出这些产品的流程。

Data Science and machine learning are subfields of computer science that focus on analyzing and making use of large amounts of data to improve the processes by which products, services, infrastructural systems, and more are developed and introduced to the market.

两者之间的关系类似于正方形是矩形,但矩形不是正方形。数据科学是包罗万象的矩形,而机器学习则是正方形,是它自己的实体。它们都是数据科学家在其工作中常用的,并且越来越受到几乎所有企业的接受。

The two relate to each other in a similar manner that squares are rectangles, but rectangles are not squares. Data Science is the all-encompassing rectangle, while machine learning is a square that is its own entity. They are both commonly employed by data scientists in their job and are increasingly being accepted by practically every business.

What is Machine Learning?

机器学习 (ML) 是一种算法,它让软件能够更准确地预测未来会发生什么,而无需专门编程来执行此操作。机器学习的基本思想是制定算法,让其可以将数据作为输入,并使用统计分析来预测输出,并且随着新数据的出现,还会更新输出。

Machine learning (ML) is a type of algorithm that lets software get more accurate at predicting what will happen in future without being specifically programmed to do so. The basic idea behind machine learning is to make algorithms that can take data as input and use statistical analysis to predict an output while also updating outputs as new data becomes available.

机器学习是使用算法在数据中查找模式,然后预测这些模式在未来如何变化的人工智能的一部分。这使工程师能够使用统计分析来查找数据中的模式。

Machine learning is a part of artificial intelligence that uses algorithms to find patterns in data and then predict how those patterns will change in the future. This lets engineers use statistical analysis to look for patterns in the data.

Facebook、Twitter、Instagram、YouTube 和 TikTok 基于你过去的行为收集有关其用户的信息,它可以猜测你的兴趣和要求,并推荐适合你需要的产品、服务或文章。

Facebook, Twitter, Instagram, YouTube, and TikTok collect information about their users, based on what you’ve done in the past, it can guess your interests and requirements and suggest products, services, or articles that fit your needs.

机器学习是一组工具和概念,用于数据科学,但它们也出现在其他领域。数据科学家通常在他们的工作中使用机器学习,以帮助他们更快地获取更多信息或找出趋势。

Machine learning is a set of tools and concepts that are used in data science, but they also show up in other fields. Data scientists often use machine learning in their work to help them get more information faster or figure out trends.

Types of Machine Learning

机器学习可以分为三种类型的算法——

Machine learning can be classified into three types of algorithms −

-

Supervised learning

-

Unsupervised learning

-

Reinforcement learning

Supervised Learning

监督式学习是一种机器学习和人工智能。它也被称为“监督式机器学习”。它的定义是它使用标记数据集来训练算法如何正确分类数据或预测结果。当数据被放入模型时,其权重会发生变化,直到模型正确合适。这是交叉验证过程的一部分。监督式学习帮助组织为广泛的现实世界问题找到大规模解决方案,例如像 Gmail 中将垃圾邮件分类到与收件箱分开的文件夹一样,我们有一个垃圾邮件文件夹。

Supervised learning is a type of machine learning and artificial intelligence. It is also called "supervised machine learning." It is defined by the fact that it uses labelled datasets to train algorithms how to correctly classify data or predict outcomes. As data is put into the model, its weights are changed until the model fits correctly. This is part of the cross validation process. Supervised learning helps organisations find large-scale solutions to a wide range of real-world problems, like classifying spam in a separate folder from your inbox like in Gmail we have a spam folder.

Supervised Learning Algorithms

一些监督式学习算法有——

Some supervised learning algorithms are −

-

Naive Bayes − Naive Bayes is a classification algoritm that is based on the Bayes Theorem’s principle of class conditional independence. This means that the presence of one feature doesn’t change the likelihood of another feature, and that each predictor has the same effect on the result/outcome.

-

Linear Regression − Linear regression is used to find how a dependent variable is related to one or more independent variables and to make predictions about what will happen in the future. Simple linear regression is when there is only one independent variable and one dependent variable.

-

Logistic Regression − When the dependent variables are continuous, linear regression is used. When the dependent variables are categorical, like "true" or "false" or "yes" or "no," logistic regression is used. Both linear and logistic regression seek to figure out the relationships between the data inputs. However, logistic regression is mostly used to solve binary classification problems, like figuring out if a particular mail is a spam or not.

-

Support Vector Machines(SVM) − A support vector machine is a popular model for supervised learning developed by Vladimir Vapnik. It can be used to both classify and predict data. So, it is usually used to solve classification problems by making a hyperplane where the distance between two groups of data points is the greatest. This line is called the "decision boundary" because it divides the groups of data points (for example, oranges and apples) on either side of the plane.

-

K-nearest Neighbour − The KNN algorithm, which is also called the "k-nearest neighbour" algorithm, groups data points based on how close they are to and related to other data points. This algorithm works on the idea that data points that are similar can be found close to each other. So, it tries to figure out how far apart the data points are, using Euclidean distance and then assigns a category based on the most common or average category. However, as the size of the test dataset grows, the processing time increases, making it less useful for classification tasks.

-

Random Forest − Random forest is another supervised machine learning algorithm that is flexible and can be used for both classification and regression. The "forest" is a group of decision trees that are not correlated to each other. These trees are then combined to reduce variation and make more accurate data predictions.

Unsupervised Learning

无监督学习(也称为无监督机器学习)使用机器学习算法查看未标记数据集并将其分组在一起。这些程序可以找到隐藏的模式或数据组。它查找信息中相似性和差异性的能力使其非常适合探索性数据分析、交叉销售策略、客户细分和图像识别。

Unsupervised learning, also called unsupervised machine learning, uses machine learning algorithms to look at unlabelled datasets and group them together. These programmes find hidden patterns or groups of data. Its ability to find similarities and differences in information makes it perfect for exploratory data analysis, cross-selling strategies, customer segmentation, and image recognition.

Common Unsupervised Learning Approaches

无监督学习模型用于以下三个主要任务:聚类、建立连接和降低维度。下面,我们将介绍学习方法和常用算法 -

Unsupervised learning models are used for three main tasks: clustering, making connections, and reducing the number of dimensions. Below, we’ll describe learning methods and common algorithms used −

Clustering - 聚类是一种数据挖掘方法,可根据相似性或差异性对未标记数据进行组织。聚类技术用于根据数据中的结构或模式将未分类、未经处理的数据项组织到组中。聚类算法有很多类型,包括排他、重叠、层次和概率。

Clustering − Clustering is a method for data mining that organises unlabelled data based on their similarities or differences. Clustering techniques are used to organise unclassified, unprocessed data items into groups according to structures or patterns in the data. There are many types of clustering algorithms, including exclusive, overlapping, hierarchical, and probabilistic.

K-means Clustering 是聚类方法的一个流行示例,其中数据点根据到每个组的质心的距离分配到 K 组。最接近某个质心的数据点将被归入同一类别。较高的 K 值表示具有更多粒度的较小组,而较低的 K 值表示具有较少粒度的较大组。K 均值聚类的常见应用包括市场细分、文档聚类、图像分割和图像压缩。

K-means Clustering is a popular example of an clustering approach in which data points are allocated to K groups based on their distance from each group’s centroid. The data points closest to a certain centroid will be grouped into the same category. A higher K number indicates smaller groups with more granularity, while a lower K value indicates bigger groupings with less granularity. Common applications of K-means clustering include market segmentation, document clustering, picture segmentation, and image compression.

Dimensionality Reduction - 尽管更多的数据通常会产生更准确的发现,但它也可能影响机器学习算法的有效性(例如,过拟合)并使数据集难以可视化。降维是一种在数据集具有过多特征或维度时使用的策略。它将数据输入量减少到可管理的水平,同时尽可能保持数据集的完整性。降维通常应用于数据预处理阶段,有很多方法,其中之一就是 -

Dimensionality Reduction − Although more data typically produces more accurate findings, it may also affect the effectiveness of machine learning algorithms (e.g., overfitting) and make it difficult to visualize datasets. Dimensionality reduction is a strategy used when a dataset has an excessive number of characteristics or dimensions. It decreases the quantity of data inputs to a manageable level while retaining the integrity of the dataset to the greatest extent feasible. Dimensionality reduction is often employed in the data pre-processing phase, and there are a number of approaches, one of them is −

Principal Component Analysis (PCA) - 这是通过特征提取消除冗余和压缩数据集的降维方法。此方法采用线性变换来生成新的数据表示,从而产生一组“主成分”。第一个主成分是使方差最大化的数据集方向。尽管第二个主成分同样在数据中找到了最大的方差,但它与第一个完全不相关,从而产生了与第一个正交的方向。此过程根据维度的数量重复,下一个主分量是与最可变前一个分量正交的方向。

Principal Component Analysis (PCA) − It is a dimensionality reduction approach used to remove redundancy and compress datasets through feature extraction. This approach employs a linear transformation to generate a new data representation, resulting in a collection of "principal components." The first principal component is the dataset direction that maximises variance. Although the second principal component similarly finds the largest variance in the data, it is fully uncorrelated with the first, resulting in a direction that is orthogonal to the first. This procedure is repeated dependent on the number of dimensions, with the next main component being the direction orthogonal to the most variable preceding components.

Reinforcement Learning

强化学习 (RL) 是一种机器学习,它允许代理通过反复试验和利用其自身行为和经验的反馈在交互式环境中学习。

Reinforcement Learning (RL) is a type of machine learning that allows an agent to learn in an interactive setting via trial and error utilising feedback from its own actions and experiences.

Key terms in reinforcement learning

一些描述 RL 问题基本组件的重要概念有 -

Some significant concepts describing the fundamental components of an RL issue are −

-

Environment − The physical surroundings in which an agent functions

-

Condition − The current standing of the agent

-

Reward − Environment-based feed-back

-

Policy − Mapping between agent state and actions

-

Value − The future compensation an agent would obtain for doing an action in a given condition.

Data Science Vs Machine Learning

数据科学是对数据的研究以及如何从中得出有意义的见解,而机器学习是对使用数据来提高性能或为预测提供信息的模型的研究和开发。机器学习是人工智能的一个子领域。

Data Science is the study of data and how to derive meaningful insights from it, while machine learning is the study and development of models that use data to enhance performance or inform predictions. Machine learning is a subfield of artificial intelligence.

近年来,机器学习和人工智能 (AI) 已开始主导数据科学的部分领域,在数据分析和商业智能中发挥着至关重要的作用。机器学习通过使用模型和算法收集和分析有关特定人群的巨量数据,自动执行数据分析并根据这些数据进行预测。数据科学和机器学习是相关的,但并不相同。

In recent years, machine learning and artificial intelligence (AI) have come to dominate portions of data science, playing a crucial role in data analytics and business intelligence. Machine learning automates data analysis and makes predictions based on the collection and analysis of massive volumes of data about certain populations using models and algorithms. Data Science and machine learning are related to each other, but not identical.

数据科学是一个广阔的领域,涵盖从数据中获取见解和信息的所有方面。它涉及收集、清理、分析和解释大量数据,以发现可能指导业务决策的模式、趋势和见解。

Data Science is a vast field that incorporates all aspects of deriving insights and information from data. It involves gathering, cleaning, analysing, and interpreting vast amount of data to discover patterns, trends, and insights that may guide business choices.

机器学习是数据科学的一个子领域,它专注于开发可以从数据中学习并根据其获取的知识进行预测或判断的算法。机器学习算法旨在通过获取新知识自动随着时间的推移提高其性能。

Machine learning is a subfield of data science that focuses on the development of algorithms that can learn from data and make predictions or judgements based on their acquired knowledge. Machine learning algorithms are meant to enhance their performance automatically over time by acquiring new knowledge.

换句话说,数据科学包含机器学习作为其众多方法之一。机器学习是数据分析和预测的有力工具,但它只是整个数据科学的一个子领域。

In other words, data science encompasses machine learning as one of its numerous methodologies. Machine learning is a strong tool for data analysis and prediction, but it is just a subfield of data science as a whole.

下面是对比表,以清晰理解。

Given below is the table of comparison for a clear understanding.

Data Science |

Machine Learning |

Data Science is a broad field that involves the extraction of insights and knowledge from large and complex datasets using various techniques, including statistical analysis, machine learning, and data visualization. |

Machine learning is a subset of data science that involves defining and developing algorithms and models that enable machines to learn from data and make predictions or decisions without being explicitly programmed. |

Data Science focuses on understanding the data, identifying patterns and trends, and extracting insights to support decision-making. |

Machine learning, on the other hand, focuses on building predictive models and making decisions based on the learned patterns. |

Data Science includes a wide range of techniques, such as data cleaning, data integration, data exploration, statistical analysis, data visualization, and machine learning. |

Machine learning, on the other hand, primarily focuses on building predictive models using algorithms such as regression, classification, and clustering. |

Data Science typically requires large and complex datasets that require significant processing and cleaning to derive insights. |

Machine learning, on the other hand, requires labelled data that can be used to train algorithms and models. |

Data Science requires skills in statistics, programming, and data visualization, as well as domain knowledge in the area being studied. |

Machine learning requires a strong understanding of algorithms, programming, and mathematics, as well as a knowledge of the specific application area. |

Data Science techniques can be used for a variety of purposes beyond prediction, such as clustering, anomaly detection, and data visualization |

Machine learning algorithms are primarily focused on making predictions or decisions based on data |

Data Science often relies on statistical methods to analyze data, |

Machine learning relies on algorithms to make predictions or decisions. |

Data Science - Data Analysis

What is Data Analysis in Data Science?

数据分析是数据科学的关键组成部分之一。数据分析被描述为一个清理、转换和建模数据的过程,以获得可操作的商业智能。它使用统计和计算方法来从大量数据中获取见解并提取信息。数据分析的目标是从数据中提取相关信息,并基于此知识做出决策。