Chat Completion API

The Chat Completion API lets developers integrate AI-powered chat completion into their applications. It uses pre-trained language models, such as GPT (Generative Pre-trained Transformer), to generate human-like, natural-language responses to user input.

The API typically works by sending a prompt or partial conversation to the AI model, which generates a completion or continuation of the conversation based on its training data and its understanding of natural language patterns. The completed response is returned to the application, which can present it to the user or process it further.

The Spring AI Chat Completion API is designed to be a simple and portable interface for interacting with various AI models, allowing developers to switch between models with minimal code changes. This design aligns with Spring’s philosophy of modularity and interchangeability.

With the help of companion classes such as Prompt for input encapsulation and ChatResponse for output handling, the Chat Completion API also unifies communication with AI models. It manages the complexity of request preparation and response parsing, offering a direct and simplified API interaction.

API Overview

This section provides a guide to the Spring AI Chat Completion API interface and associated classes.

ChatClient

Here is the ChatClient interface definition:

    public interface ChatClient extends ModelClient<Prompt, ChatResponse> {

        default String call(String message) {
            // implementation omitted
        }

        @Override
        ChatResponse call(Prompt prompt);

    }

The call method with a String parameter simplifies initial use, avoiding the complexities of the more sophisticated Prompt and ChatResponse classes. In real-world applications, it is more common to use the call method that takes a Prompt instance and returns a ChatResponse.
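The relationship between the two call overloads can be sketched with plain Java. This is a minimal illustration and not the Spring AI source: SimplePrompt, SimpleResponse, SimpleChatClient, and EchoChatClient are hypothetical stand-ins defined locally, and the body of the String overload is an assumption about how such a convenience method might delegate, since the listing above omits it.

```java
import java.util.List;

// Local stand-ins that only mirror the shapes shown above; NOT the Spring AI classes.
record SimplePrompt(List<String> messages) {}

record SimpleResponse(List<String> generations) {}

interface SimpleChatClient {

    // Assumed convenience overload: wrap the text in a prompt, delegate to the
    // full overload, and unwrap the first generation (empty string if there is none).
    default String call(String message) {
        SimpleResponse response = call(new SimplePrompt(List.of(message)));
        return response.generations().isEmpty() ? "" : response.generations().get(0);
    }

    SimpleResponse call(SimplePrompt prompt);
}

// A fake "model" that echoes the last message of the prompt back to the caller.
class EchoChatClient implements SimpleChatClient {

    @Override
    public SimpleResponse call(SimplePrompt prompt) {
        String last = prompt.messages().get(prompt.messages().size() - 1);
        return new SimpleResponse(List.of("echo: " + last));
    }
}

class ChatClientSketch {

    public static void main(String[] args) {
        SimpleChatClient client = new EchoChatClient();

        // Quick start: plain text in, plain text out.
        System.out.println(client.call("Hello"));

        // Full form: the caller builds the prompt and inspects the whole response.
        SimpleResponse response = client.call(new SimplePrompt(List.of("Be brief.", "Hello")));
        System.out.println(response.generations());
    }
}
```

The String overload hides the request and response types entirely, which is convenient for a first experiment but gives no access to multiple generations or to metadata.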

StreamingChatClient

Here is the StreamingChatClient interface definition:

    public interface StreamingChatClient extends StreamingModelClient<Prompt, ChatResponse> {

        @Override
        Flux<ChatResponse> stream(Prompt prompt);

    }

The stream method takes a Prompt request, as ChatClient does, but it streams the responses using the reactive Flux API.

Prompt

The Prompt is a ModelRequest that encapsulates a list of Message objects and optional model request options.

The following listing shows a truncated version of the Prompt class, excluding constructors and other utility methods:

    public class Prompt implements ModelRequest<List<Message>> {

        private final List<Message> messages;

        private ChatOptions modelOptions;

        @Override
        public ChatOptions getOptions() {...}

        @Override
        public List<Message> getInstructions() {...}

        // constructors and utility methods omitted
    }

Message

The Message interface encapsulates a textual message, a collection of attributes as a Map, and a categorization known as MessageType. The interface is defined as follows:

    public interface Message {

        String getContent();

        Map<String, Object> getProperties();

        MessageType getMessageType();

    }

The Message interface has various implementations that correspond to the categories of messages that an AI model can process. Some models, like OpenAI’s chat completion endpoint, distinguish between message categories based on conversational roles, which the MessageType maps.

For instance, OpenAI recognizes message categories for distinct conversational roles such as system, user, function, or assistant.

While the term MessageType might imply a specific message format, in this context it designates the role a message plays in the dialogue.

For AI models that do not use specific roles, the UserMessage implementation acts as a standard category, typically representing user-generated inquiries or instructions. To understand the practical application and the relationship between Prompt and Message, especially in the context of these roles or message categories, see the detailed explanations in the Prompts section.
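As a rough illustration of messages tagged with conversational roles, the sketch below models a short conversation in plain Java. Role and RoleMessage are hypothetical stand-ins, not the Spring AI MessageType and Message types; the role names follow the ones listed above, and the user(...) helper is an assumption that mirrors the idea of a default user category.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Illustrative stand-ins; the role names follow the text above.
enum Role { SYSTEM, USER, ASSISTANT, FUNCTION }

record RoleMessage(Role role, String content) {

    // Hypothetical helper: a message in the default USER category.
    static RoleMessage user(String content) {
        return new RoleMessage(Role.USER, content);
    }
}

class MessageRoleSketch {

    // Renders each message as a "role: content" line, in conversation order.
    static String render(List<RoleMessage> messages) {
        return messages.stream()
                .map(m -> m.role().name().toLowerCase(Locale.ROOT) + ": " + m.content())
                .collect(Collectors.joining("\n"));
    }

    public static void main(String[] args) {
        List<RoleMessage> conversation = List.of(
                new RoleMessage(Role.SYSTEM, "You are a terse assistant."),
                RoleMessage.user("Name one JVM language."),
                new RoleMessage(Role.ASSISTANT, "Kotlin."));

        System.out.println(render(conversation));
    }
}
```

The role travels with each message, so a client for a role-aware model can map it directly, while a client for a role-less model can treat everything as user input.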

Chat Options

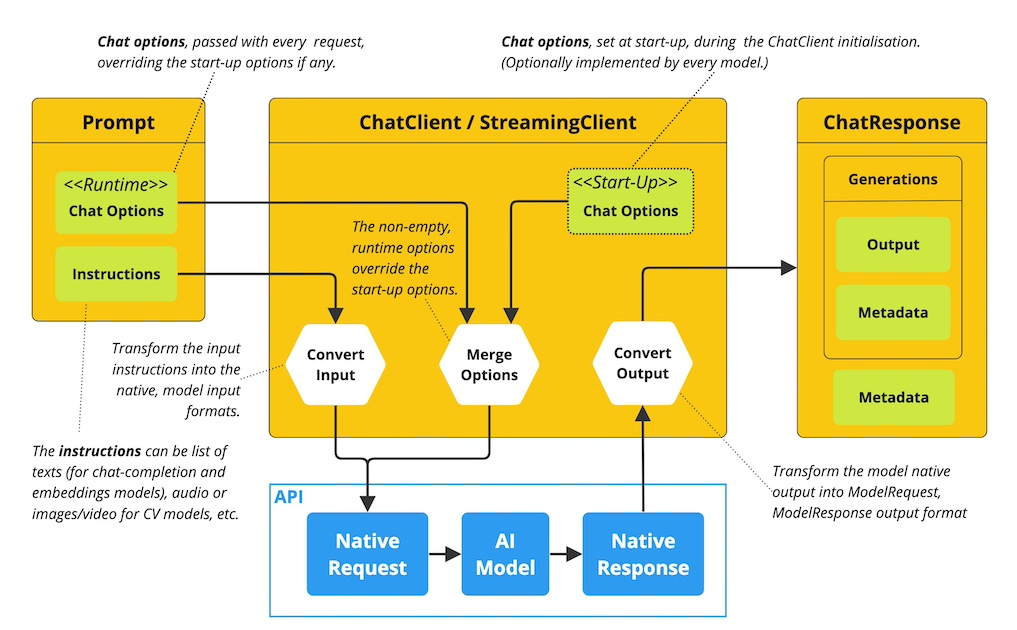

ChatOptions represents the options that can be passed to the AI model. The ChatOptions interface extends ModelOptions and defines a few portable options that can be passed to the AI model. It is defined as follows:

    public interface ChatOptions extends ModelOptions {

        Float getTemperature();

        void setTemperature(Float temperature);

        Float getTopP();

        void setTopP(Float topP);

        Integer getTopK();

        void setTopK(Integer topK);

    }

Additionally, every model-specific ChatClient/StreamingChatClient implementation can have its own options that can be passed to the AI model. For example, the OpenAI Chat Completion model has its own options such as presencePenalty, frequencyPenalty, and bestOf.

This is a powerful feature that allows developers to set model-specific options when starting the application and then override them at runtime using the Prompt request.
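One way to picture the startup-versus-runtime override is a merge in which any value set on the request wins over the startup default. The sketch below is an assumption about such a merge rule, not the Spring AI implementation: SketchOptions is a hypothetical local record that only borrows the three portable option names shown above.

```java
// Local stand-in for the portable options shown above; not the Spring AI type.
record SketchOptions(Float temperature, Float topP, Integer topK) {

    // Assumed merge rule: a non-null runtime value wins, otherwise the default is kept.
    SketchOptions overriddenBy(SketchOptions runtime) {
        if (runtime == null) {
            return this;
        }
        return new SketchOptions(
                runtime.temperature() != null ? runtime.temperature() : temperature,
                runtime.topP() != null ? runtime.topP() : topP,
                runtime.topK() != null ? runtime.topK() : topK);
    }
}

class OptionsOverrideSketch {

    public static void main(String[] args) {
        // Defaults configured once, when the application starts.
        SketchOptions startup = new SketchOptions(0.7f, 0.9f, 40);

        // Options carried by a single request; only temperature is overridden.
        SketchOptions perRequest = new SketchOptions(0.2f, null, null);

        SketchOptions effective = startup.overriddenBy(perRequest);
        System.out.println(effective);
    }
}
```

Using nullable wrapper types (Float, Integer) rather than primitives is what makes "not set" distinguishable from an explicit value in this kind of merge.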

ChatResponse

The structure of the ChatResponse class is as follows:

    public class ChatResponse implements ModelResponse<Generation> {

        private final ChatResponseMetadata chatResponseMetadata;

        private final List<Generation> generations;

        @Override
        public ChatResponseMetadata getMetadata() {...}

        @Override
        public List<Generation> getResults() {...}

        // other methods omitted

    }

The ChatResponse class holds the AI model’s output, with each Generation instance containing one of potentially multiple outputs resulting from a single prompt.

The ChatResponse class also carries ChatResponseMetadata, which holds metadata about the AI model’s response.
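The shape described here, one response holding several candidate outputs plus response-level metadata, can be sketched as follows. CandidateOutput and ResponseEnvelope are hypothetical local stand-ins rather than the Spring AI classes, and the metadata keys (finishReason, promptTokens) are invented purely for illustration.

```java
import java.util.List;
import java.util.Map;

// Local stand-ins for the response shape described above; not the Spring AI classes.
record CandidateOutput(String text, Map<String, Object> metadata) {}

record ResponseEnvelope(List<CandidateOutput> results, Map<String, Object> metadata) {

    // Convenience accessor: the first candidate, or null when the model returned none.
    CandidateOutput firstResult() {
        return results.isEmpty() ? null : results.get(0);
    }
}

class ResponseSketch {

    // Builds a sample response with two candidates for a single prompt.
    static ResponseEnvelope sample() {
        return new ResponseEnvelope(
                List.of(
                        new CandidateOutput("Paris.", Map.of("finishReason", "STOP")),
                        new CandidateOutput("The capital is Paris.", Map.of("finishReason", "STOP"))),
                Map.of("promptTokens", 12)); // hypothetical metadata key
    }

    public static void main(String[] args) {
        ResponseEnvelope response = sample();

        // Every candidate is available, each with its own metadata.
        for (CandidateOutput candidate : response.results()) {
            System.out.println(candidate.text() + " " + candidate.metadata());
        }

        // Most callers only need the first candidate.
        System.out.println(response.firstResult().text());
    }
}
```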

Generation

Finally, the Generation class implements ModelResult to represent the assistant message output and the metadata related to this result:

    public class Generation implements ModelResult<AssistantMessage> {

        private AssistantMessage assistantMessage;

        private ChatGenerationMetadata chatGenerationMetadata;

        @Override
        public AssistantMessage getOutput() {...}

        @Override
        public ChatGenerationMetadata getMetadata() {...}

        // other methods omitted

    }

Available Implementations

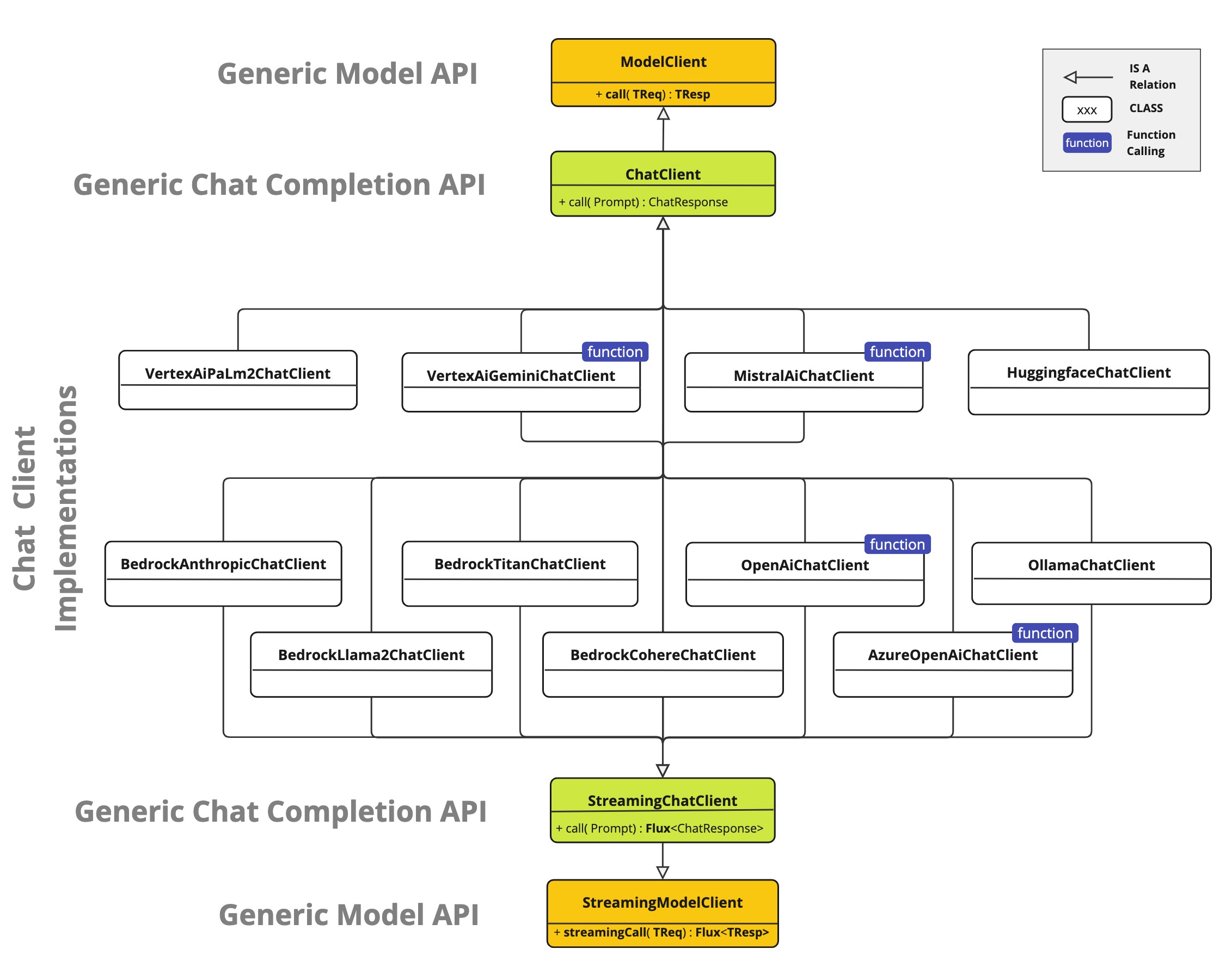

ChatClient and StreamingChatClient implementations are provided for the following model providers:

- OpenAI Chat Completion (streaming & function-calling support)
- Microsoft Azure Open AI Chat Completion (streaming & function-calling support)
- HuggingFace Chat Completion (no streaming support)
- Google Vertex AI PaLM2 Chat Completion (no streaming support)
- Google Vertex AI Gemini Chat Completion (streaming, multi-modality & function-calling support)
- Mistral AI Chat Completion (streaming & function-calling support)

Chat Model API

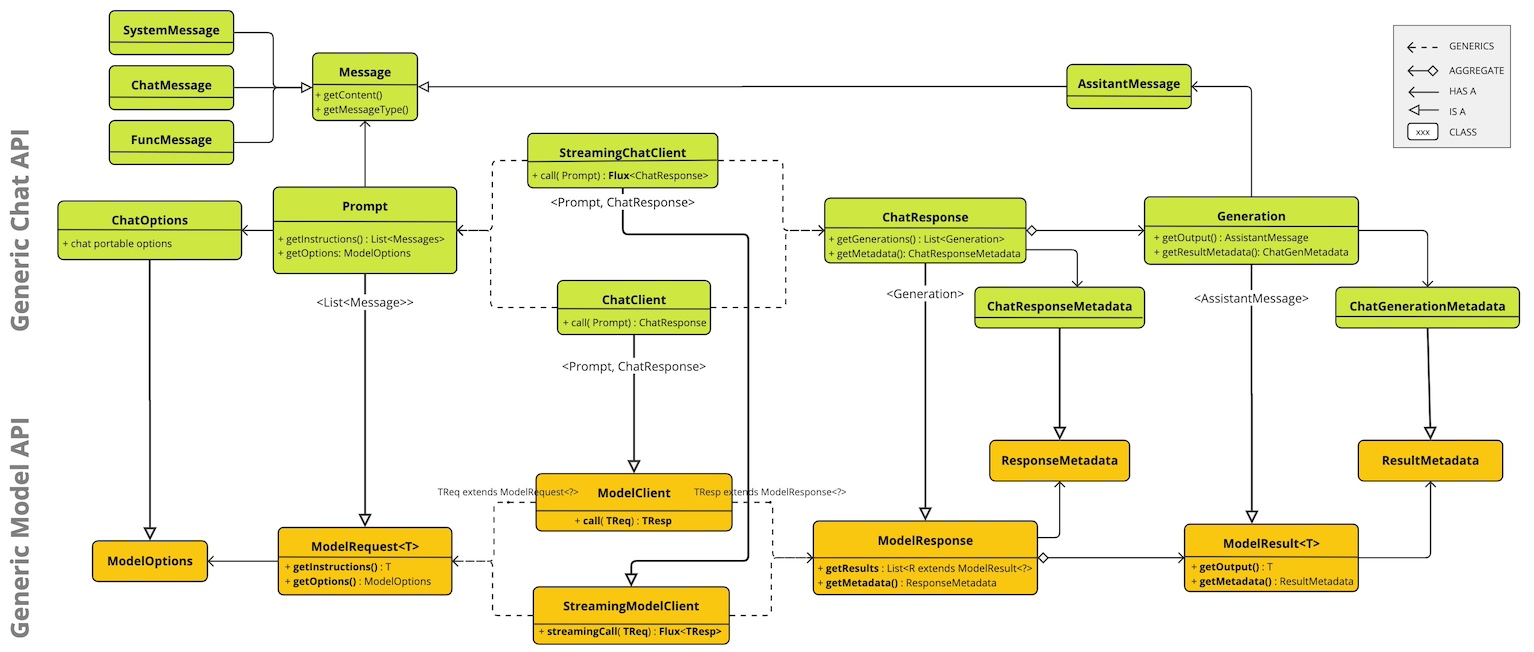

The Spring AI Chat Completion API is built on top of the Spring AI Generic Model API, providing chat-specific abstractions and implementations. The class diagram in this section illustrates the main classes and interfaces of the Spring AI Chat Completion API.