Meta-Data Schema

Overview

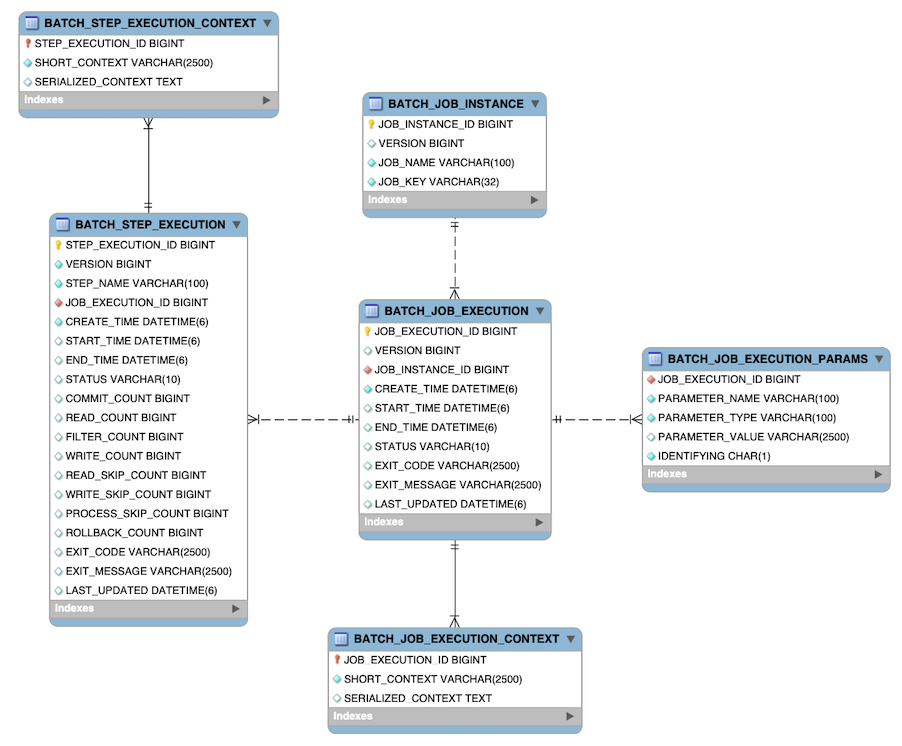

Spring Batch 元数据表与在 Java 中表示它们的域对象非常匹配。例如,JobInstance、JobExecution、JobParameters 和 StepExecution 分别映射到 BATCH_JOB_INSTANCE、BATCH_JOB_EXECUTION、BATCH_JOB_EXECUTION_PARAMS 和 BATCH_STEP_EXECUTION。ExecutionContext 映射到 BATCH_JOB_EXECUTION_CONTEXT 和 BATCH_STEP_EXECUTION_CONTEXT。JobRepository 负责将每个 Java 对象保存和存储到其正确的表中。此附录详细描述了元数据表,以及在创建它们时做出的许多设计决策。在查看本附录中稍后描述的各个表创建语句时,请注意所使用的数据类型尽可能通用。Spring Batch 提供了许多架构作为示例。由于各个数据库供应商处理数据类型的方式不同,因此所有这些架构都具有不同的数据类型。下图显示了所有六个表及其相互关系的 ERD 模型:

The Spring Batch Metadata tables closely match the domain objects that represent them in

Java. For example, JobInstance, JobExecution, JobParameters, and StepExecution

map to BATCH_JOB_INSTANCE, BATCH_JOB_EXECUTION, BATCH_JOB_EXECUTION_PARAMS, and

BATCH_STEP_EXECUTION, respectively. ExecutionContext maps to both

BATCH_JOB_EXECUTION_CONTEXT and BATCH_STEP_EXECUTION_CONTEXT. The JobRepository is

responsible for saving and storing each Java object into its correct table. This appendix

describes the metadata tables in detail, along with many of the design decisions that

were made when creating them. When viewing the various table creation statements described

later in this appendix, note that the data types used are as generic as possible. Spring

Batch provides many schemas as examples. All of them have varying data types, due to

variations in how individual database vendors handle data types. The following image

shows an ERD model of all six tables and their relationships to one another:

Example DDL Scripts

Spring Batch Core JAR 文件包含示例脚本,以便为多个数据库平台创建关系表(这些平台又按照顺序由 jobrepository 工厂 Bean 或名称空间等效项自动检测)。这些脚本可以照原样使用,或者根据需要使用附加索引和约束进行修改。文件名采用表单 schema-.sql,其中 是目标数据库平台的简称。这些脚本位于包 org.springframework.batch.core 中。

The Spring Batch Core JAR file contains example scripts to create the relational tables

for a number of database platforms (which are, in turn, auto-detected by the job

repository factory bean or namespace equivalent). These scripts can be used as is or

modified with additional indexes and constraints, as desired. The file names are in the

form schema-.sql, where is the short name of the target database platform.

The scripts are in the package org.springframework.batch.core.

Migration DDL Scripts

Spring Batch 提供了在升级版本时需要执行的迁移 DDL 脚本。这些脚本可以在核心 Jar 文件中的 org/springframework/batch/core/migration 下找到。迁移脚本组织成与它们被引用的版本号对应的文件夹:

Spring Batch provides migration DDL scripts that you need to execute when you upgrade versions.

These scripts can be found in the Core Jar file under org/springframework/batch/core/migration.

Migration scripts are organized into folders corresponding to version numbers in which they were introduced:

-

2.2: Contains scripts you need to migrate from a version before2.2to version2.2 -

4.1: Contains scripts you need to migrate from a version before4.1to version4.1

Version

此附录中讨论的许多数据库表都包含一个版本列。此列非常重要,因为 Spring Batch 在处理数据库更新时采用乐观锁定策略。这意味着,每次记录被“接触”(更新)时,版本列中的值将递增 1。当存储库返回保存值时,如果版本号已更改,它将抛出 OptimisticLockingFailureException,表示并发访问出错。此检查是必要的,因为即使不同批处理作业可能在不同的机器上运行,它们都使用相同的数据库表。

Many of the database tables discussed in this appendix contain a version column. This

column is important, because Spring Batch employs an optimistic locking strategy when

dealing with updates to the database. This means that each time a record is “touched”

(updated), the value in the version column is incremented by one. When the repository goes

back to save the value, if the version number has changed, it throws an

OptimisticLockingFailureException, indicating that there has been an error with concurrent

access. This check is necessary, since, even though different batch jobs may be running

in different machines, they all use the same database tables.

Identity

BATCH_JOB_INSTANCE、BATCH_JOB_EXECUTION 和 BATCH_STEP_EXECUTION 都包含以 _ID 结尾的列。这些字段充当其各自表的主键。但是,它们不是数据库生成的关键字段。更确切地说,它们是由单独序列生成的。这是必需的,因为将其中一个域对象插入数据库后,给定的键需要设置在实际对象上,以便可以在 Java 中唯一识别它们。较新的数据库驱动程序(JDBC 3.0 及更高版本)支持具有数据库生成键的此功能。但是,序列用于无需此功能,模式的每个变体都包含以下语句的某种形式:

BATCH_JOB_INSTANCE, BATCH_JOB_EXECUTION, and BATCH_STEP_EXECUTION each contain

columns ending in _ID. These fields act as primary keys for their respective tables.

However, they are not database generated keys. Rather, they are generated by separate

sequences. This is necessary because, after inserting one of the domain objects into the

database, the key it is given needs to be set on the actual object so that they can be

uniquely identified in Java. Newer database drivers (JDBC 3.0 and up) support this

feature with database-generated keys. However, rather than require that feature,

sequences are used. Each variation of the schema contains some form of the following

statements:

CREATE SEQUENCE BATCH_STEP_EXECUTION_SEQ;

CREATE SEQUENCE BATCH_JOB_EXECUTION_SEQ;

CREATE SEQUENCE BATCH_JOB_SEQ;许多数据库供应商不支持序列。在这些情况下,将使用以下陈述等解决方法来解决此问题,例如以下适用于 MySQL 的语句:

Many database vendors do not support sequences. In these cases, work-arounds are used, such as the following statements for MySQL:

CREATE TABLE BATCH_STEP_EXECUTION_SEQ (ID BIGINT NOT NULL) type=InnoDB;

INSERT INTO BATCH_STEP_EXECUTION_SEQ values(0);

CREATE TABLE BATCH_JOB_EXECUTION_SEQ (ID BIGINT NOT NULL) type=InnoDB;

INSERT INTO BATCH_JOB_EXECUTION_SEQ values(0);

CREATE TABLE BATCH_JOB_SEQ (ID BIGINT NOT NULL) type=InnoDB;

INSERT INTO BATCH_JOB_SEQ values(0);在前面的情况下,表用于代替每个序列。Spring 核心类 MySQLMaxValueIncrementer 然后递增此序列中的一列以提供类似的功能。

In the preceding case, a table is used in place of each sequence. The Spring core class,

MySQLMaxValueIncrementer, then increments the one column in this sequence to

give similar functionality.

The BATCH_JOB_INSTANCE Table

BATCH_JOB_INSTANCE 表保存与 JobInstance 相关的所有信息,并作为整个层次结构的顶端。以下通用 DDL 语句用于创建它:

The BATCH_JOB_INSTANCE table holds all information relevant to a JobInstance and

serves as the top of the overall hierarchy. The following generic DDL statement is used

to create it:

CREATE TABLE BATCH_JOB_INSTANCE (

JOB_INSTANCE_ID BIGINT PRIMARY KEY ,

VERSION BIGINT,

JOB_NAME VARCHAR(100) NOT NULL ,

JOB_KEY VARCHAR(32) NOT NULL

);以下列表描述表中的每个列:

The following list describes each column in the table:

-

JOB_INSTANCE_ID: The unique ID that identifies the instance. It is also the primary key. The value of this column should be obtainable by calling thegetIdmethod onJobInstance. -

VERSION: See Version. -

JOB_NAME: Name of the job obtained from theJobobject. Because it is required to identify the instance, it must not be null. -

JOB_KEY: A serialization of theJobParametersthat uniquely identifies separate instances of the same job from one another. (JobInstanceswith the same job name must have differentJobParametersand, thus, differentJOB_KEYvalues).

The BATCH_JOB_EXECUTION_PARAMS Table

BATCH_JOB_EXECUTION_PARAMS 表保存与 JobParameters 对象相关的所有信息。它包含传给 Job 的 0 个或更多键/值对,并作为记录运行作业的参数。对于有助于生成作业标识的每个参数,IDENTIFYING 标志设置为 true。请注意,表已被反规范化。与其为每种类型创建单独表,而是创建一个表,其中包含指示类型的列,如下所示:

The BATCH_JOB_EXECUTION_PARAMS table holds all information relevant to the

JobParameters object. It contains 0 or more key/value pairs passed to a Job and

serves as a record of the parameters with which a job was run. For each parameter that

contributes to the generation of a job’s identity, the IDENTIFYING flag is set to true.

Note that the table has been denormalized. Rather than creating a separate table for each

type, there is one table with a column indicating the type, as the following

listing shows:

CREATE TABLE BATCH_JOB_EXECUTION_PARAMS (

JOB_EXECUTION_ID BIGINT NOT NULL ,

PARAMETER_NAME VARCHAR(100) NOT NULL ,

PARAMETER_TYPE VARCHAR(100) NOT NULL ,

PARAMETER_VALUE VARCHAR(2500) ,

IDENTIFYING CHAR(1) NOT NULL ,

constraint JOB_EXEC_PARAMS_FK foreign key (JOB_EXECUTION_ID)

references BATCH_JOB_EXECUTION(JOB_EXECUTION_ID)

);以下列表描述每列:

The following list describes each column:

-

JOB_EXECUTION_ID: Foreign key from theBATCH_JOB_EXECUTIONtable that indicates the job execution to which the parameter entry belongs. Note that multiple rows (that is, key/value pairs) may exist for each execution. -

PARAMETER_NAME: The parameter name.

-

PARAMETER_TYPE: The fully qualified name of the type of the parameter.

-

PARAMETER_VALUE: Parameter value

-

IDENTIFYING: Flag indicating whether the parameter contributed to the identity of the related

JobInstance.

请注意,此表没有主键。这是因为框架无法使用它,因此不需要它。如果需要,可以添加具有数据库生成键的主键,而不会对框架本身造成任何问题。

Note that there is no primary key for this table. This is because the framework has no use for one and, thus, does not require it. If need be, you can add a primary key with a database generated key without causing any issues to the framework itself.

The BATCH_JOB_EXECUTION Table

BATCH_JOB_EXECUTION 表保存与 JobExecution 对象相关的所有信息。每次运行 Job 时,总有一个名为 JobExecution 的新 JobExecution 以及此表中的一个新行。以下列表显示了 BATCH_JOB_EXECUTION 表的定义:

The BATCH_JOB_EXECUTION table holds all information relevant to the JobExecution

object. Every time a Job is run, there is always a new called JobExecution and a new row in

this table. The following listing shows the definition of the BATCH_JOB_EXECUTION

table:

CREATE TABLE BATCH_JOB_EXECUTION (

JOB_EXECUTION_ID BIGINT PRIMARY KEY ,

VERSION BIGINT,

JOB_INSTANCE_ID BIGINT NOT NULL,

CREATE_TIME TIMESTAMP NOT NULL,

START_TIME TIMESTAMP DEFAULT NULL,

END_TIME TIMESTAMP DEFAULT NULL,

STATUS VARCHAR(10),

EXIT_CODE VARCHAR(20),

EXIT_MESSAGE VARCHAR(2500),

LAST_UPDATED TIMESTAMP,

constraint JOB_INSTANCE_EXECUTION_FK foreign key (JOB_INSTANCE_ID)

references BATCH_JOB_INSTANCE(JOB_INSTANCE_ID)

) ;以下列表描述每列:

The following list describes each column:

-

JOB_EXECUTION_ID: Primary key that uniquely identifies this execution. The value of this column is obtainable by calling thegetIdmethod of theJobExecutionobject. -

VERSION: See Version. -

JOB_INSTANCE_ID: Foreign key from theBATCH_JOB_INSTANCEtable. It indicates the instance to which this execution belongs. There may be more than one execution per instance. -

CREATE_TIME: Timestamp representing the time when the execution was created. -

START_TIME: Timestamp representing the time when the execution was started. -

END_TIME: Timestamp representing the time when the execution finished, regardless of success or failure. An empty value in this column when the job is not currently running indicates that there has been some type of error and the framework was unable to perform a last save before failing. -

STATUS: Character string representing the status of the execution. This may beCOMPLETED,STARTED, and others. The object representation of this column is theBatchStatusenumeration. -

EXIT_CODE: Character string representing the exit code of the execution. In the case of a command-line job, this may be converted into a number. -

EXIT_MESSAGE: Character string representing a more detailed description of how the job exited. In the case of failure, this might include as much of the stack trace as is possible. -

LAST_UPDATED: Timestamp representing the last time this execution was persisted.

The BATCH_STEP_EXECUTION Table

BATCH_STEP_EXECUTION 表保存与 StepExecution 对象相关的所有信息。此表在许多方面与 BATCH_JOB_EXECUTION 表相似,并且为每个 JobExecution 总是有至少一个 Step 条目。以下列表显示了 BATCH_STEP_EXECUTION 表的定义:

The BATCH_STEP_EXECUTION table holds all information relevant to the StepExecution

object. This table is similar in many ways to the BATCH_JOB_EXECUTION table, and there

is always at least one entry per Step for each JobExecution created. The following

listing shows the definition of the BATCH_STEP_EXECUTION table:

CREATE TABLE BATCH_STEP_EXECUTION (

STEP_EXECUTION_ID BIGINT NOT NULL PRIMARY KEY ,

VERSION BIGINT NOT NULL,

STEP_NAME VARCHAR(100) NOT NULL,

JOB_EXECUTION_ID BIGINT NOT NULL,

CREATE_TIME TIMESTAMP NOT NULL,

START_TIME TIMESTAMP DEFAULT NULL ,

END_TIME TIMESTAMP DEFAULT NULL,

STATUS VARCHAR(10),

COMMIT_COUNT BIGINT ,

READ_COUNT BIGINT ,

FILTER_COUNT BIGINT ,

WRITE_COUNT BIGINT ,

READ_SKIP_COUNT BIGINT ,

WRITE_SKIP_COUNT BIGINT ,

PROCESS_SKIP_COUNT BIGINT ,

ROLLBACK_COUNT BIGINT ,

EXIT_CODE VARCHAR(20) ,

EXIT_MESSAGE VARCHAR(2500) ,

LAST_UPDATED TIMESTAMP,

constraint JOB_EXECUTION_STEP_FK foreign key (JOB_EXECUTION_ID)

references BATCH_JOB_EXECUTION(JOB_EXECUTION_ID)

) ;以下列表描述每列:

The following list describes each column:

-

STEP_EXECUTION_ID: Primary key that uniquely identifies this execution. The value of this column should be obtainable by calling thegetIdmethod of theStepExecutionobject. -

VERSION: See Version. -

STEP_NAME: The name of the step to which this execution belongs. -

JOB_EXECUTION_ID: Foreign key from theBATCH_JOB_EXECUTIONtable. It indicates theJobExecutionto which thisStepExecutionbelongs. There may be only oneStepExecutionfor a givenJobExecutionfor a givenStepname. -

START_TIME: Timestamp representing the time when the execution was started. -

END_TIME: Timestamp representing the time the when execution was finished, regardless of success or failure. An empty value in this column, even though the job is not currently running, indicates that there has been some type of error and the framework was unable to perform a last save before failing. -

STATUS: Character string representing the status of the execution. This may beCOMPLETED,STARTED, and others. The object representation of this column is theBatchStatusenumeration. -

COMMIT_COUNT: The number of times in which the step has committed a transaction during this execution. -

READ_COUNT: The number of items read during this execution. -

FILTER_COUNT: The number of items filtered out of this execution. -

WRITE_COUNT: The number of items written and committed during this execution. -

READ_SKIP_COUNT: The number of items skipped on read during this execution. -

WRITE_SKIP_COUNT: The number of items skipped on write during this execution. -

PROCESS_SKIP_COUNT: The number of items skipped during processing during this execution. -

ROLLBACK_COUNT: The number of rollbacks during this execution. Note that this count includes each time rollback occurs, including rollbacks for retry and those in the skip recovery procedure. -

EXIT_CODE: Character string representing the exit code of the execution. In the case of a command-line job, this may be converted into a number. -

EXIT_MESSAGE: Character string representing a more detailed description of how the job exited. In the case of failure, this might include as much of the stack trace as is possible. -

LAST_UPDATED: Timestamp representing the last time this execution was persisted.

The BATCH_JOB_EXECUTION_CONTEXT Table

BATCH_JOB_EXECUTION_CONTEXT 表保存与某个 Job 的 ExecutionContext 相关的所有信息。对于每个 JobExecution 都有一个 Job ExecutionContext,它包含特定作业执行所需的所有作业级别数据。此数据通常表示故障后必须检索的状态,以便 JobInstance 可以“从中断处继续”。以下列表显示了 BATCH_JOB_EXECUTION_CONTEXT 表的定义:

The BATCH_JOB_EXECUTION_CONTEXT table holds all information relevant to the

ExecutionContext of a Job. There is exactly one Job ExecutionContext for each

JobExecution, and it contains all of the job-level data that is needed for a particular

job execution. This data typically represents the state that must be retrieved after a

failure, so that a JobInstance can “start where it left off”. The following

listing shows the definition of the BATCH_JOB_EXECUTION_CONTEXT table:

CREATE TABLE BATCH_JOB_EXECUTION_CONTEXT (

JOB_EXECUTION_ID BIGINT PRIMARY KEY,

SHORT_CONTEXT VARCHAR(2500) NOT NULL,

SERIALIZED_CONTEXT CLOB,

constraint JOB_EXEC_CTX_FK foreign key (JOB_EXECUTION_ID)

references BATCH_JOB_EXECUTION(JOB_EXECUTION_ID)

) ;以下列表描述每列:

The following list describes each column:

-

JOB_EXECUTION_ID: Foreign key representing theJobExecutionto which the context belongs. There may be more than one row associated with a given execution. -

SHORT_CONTEXT: A string version of theSERIALIZED_CONTEXT. -

SERIALIZED_CONTEXT: The entire context, serialized.

The BATCH_STEP_EXECUTION_CONTEXT Table

BATCH_STEP_EXECUTION_CONTEXT 表保存与 Step 的 ExecutionContext 相关的所有信息。对于每个 StepExecution 都有一个 ExecutionContext,它包含特定步骤执行需要保留的所有数据。此数据通常表示故障后必须检索的状态,以便 JobInstance 可以“从中断处继续”。以下列表显示了 BATCH_STEP_EXECUTION_CONTEXT 表的定义:

The BATCH_STEP_EXECUTION_CONTEXT table holds all information relevant to the

ExecutionContext of a Step. There is exactly one ExecutionContext per

StepExecution, and it contains all of the data that

needs to be persisted for a particular step execution. This data typically represents the

state that must be retrieved after a failure so that a JobInstance can “start

where it left off”. The following listing shows the definition of the

BATCH_STEP_EXECUTION_CONTEXT table:

CREATE TABLE BATCH_STEP_EXECUTION_CONTEXT (

STEP_EXECUTION_ID BIGINT PRIMARY KEY,

SHORT_CONTEXT VARCHAR(2500) NOT NULL,

SERIALIZED_CONTEXT CLOB,

constraint STEP_EXEC_CTX_FK foreign key (STEP_EXECUTION_ID)

references BATCH_STEP_EXECUTION(STEP_EXECUTION_ID)

) ;以下列表描述每列:

The following list describes each column:

-

STEP_EXECUTION_ID: Foreign key representing theStepExecutionto which the context belongs. There may be more than one row associated with a given execution. -

SHORT_CONTEXT: A string version of theSERIALIZED_CONTEXT. -

SERIALIZED_CONTEXT: The entire context, serialized.

Archiving

因为每次运行批处理作业时都会在多个表中创建条目,所以为元数据表创建归档策略很常见。这些表本身旨在显示过去发生的事情的记录,并且通常不会影响任何作业的运行,除了与重启相关的几个值得注意的例外情况:

Because there are entries in multiple tables every time a batch job is run, it is common to create an archive strategy for the metadata tables. The tables themselves are designed to show a record of what happened in the past and generally do not affect the run of any job, with a few notable exceptions pertaining to restart:

-

The framework uses the metadata tables to determine whether a particular

JobInstancehas been run before. If it has been run and if the job is not restartable, an exception is thrown. -

If an entry for a

JobInstanceis removed without having completed successfully, the framework thinks that the job is new rather than a restart. -

If a job is restarted, the framework uses any data that has been persisted to the

ExecutionContextto restore theJob’sstate. Therefore, removing any entries from this table for jobs that have not completed successfully prevents them from starting at the correct point if they are run again.

International and Multi-byte Characters

如果你在你的业务处理中使用多字节字符集(如中文或西里尔文),这些字符可能需要保留在 Spring Batch 模式中。许多用户发现,仅仅将模式更改为两倍 VARCHAR`列的长度就足够了。其他人更喜欢将 JobRepository配置为 `max-varchar-length`的一半。`VARCHAR`列长度值。一些用户还报告说他们在模式定义中使用 `NVARCHAR`代替 `VARCHAR。最佳结果取决于数据库平台和数据库服务器在本地配置的方式。

If you use multi-byte character sets (such as Chinese or Cyrillic) in your business

processing, those characters might need to be persisted in the Spring Batch schema.

Many users find that simply changing the schema to double the length of the VARCHAR

columns is enough. Others prefer to configure the

JobRepository with max-varchar-length half the

value of the VARCHAR column length. Some users have also reported that they use

NVARCHAR in place of VARCHAR in their schema definitions. The best result depends on

the database platform and the way the database server has been configured locally.

Recommendations for Indexing Metadata Tables

Spring Batch 为核心 jar 文件中的几个常用数据库平台提供元数据表的 DDL 样本。该 DDL 中不包含索引声明,因为存在太多关于用户如何进行索引的变体,具体取决于其精确平台、本地约定以及作业操作的业务需求。下表提供了一些指示,说明 Spring Batch 提供的 DAO 实现将在 WHERE 子句中使用哪些列以及可能使用它们的频率,以便各个项目可以决定自己的索引:

Spring Batch provides DDL samples for the metadata tables in the core jar file for

several common database platforms. Index declarations are not included in that DDL,

because there are too many variations in how users may want to index, depending on their

precise platform, local conventions, and the business requirements of how the jobs are

operated. The following table provides some indication as to which columns are going to

be used in a WHERE clause by the DAO implementations provided by Spring Batch and how

frequently they might be used so that individual projects can make up their own minds

about indexing:

Default Table Name |

Where Clause |

Frequency |

|

|

Every time a job is launched |

|

|

Every time a job is restarted |

|

|

On commit interval, a.k.a. chunk (and at start and end of step) |

|

|

Before each step execution |